Huggingface tokenizers

Big shoutout to rlrs for the fast replace normalizers PR, which boosts the performance of the tokenizers. This release also reworks the release pipeline. Full Changelog: v0.

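A tokenizer is in charge of preparing the inputs for a model. The library contains tokenizers for all the models, and the tokenizer classes inherit from PreTrainedTokenizerBase. Two of its arguments deserve a note. When an input is truncated and the overflowing tokens are returned, the value of the stride argument defines the number of overlapping tokens between the truncated and the overflowing sequences. If is_split_into_words is set to True, the tokenizer assumes the input is already split into words (for instance, by splitting it on whitespace), which it will then tokenize. This is useful for NER or token classification.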
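To make the idea of overlapping tokens concrete, here is a minimal sketch in plain Python. It is not the library's implementation: the helper name `split_with_overlap` and its parameters are hypothetical, and it only illustrates how consecutive windows can share a fixed number of tokens.

```python
def split_with_overlap(token_ids, max_length, stride):
    """Split a list of token ids into windows of at most `max_length` items.

    Hypothetical helper for illustration only: consecutive windows share
    `stride` tokens, which mirrors the idea of "overlapping tokens".
    """
    if not 0 <= stride < max_length:
        raise ValueError("stride must satisfy 0 <= stride < max_length")

    step = max_length - stride  # how far each new window advances
    chunks = []
    start = 0
    while True:
        chunks.append(token_ids[start:start + max_length])
        if start + max_length >= len(token_ids):
            break  # the current window already reaches the end
        start += step
    return chunks


windows = split_with_overlap(list(range(10)), max_length=4, stride=2)
print(windows)
```

Each window after the first starts with the last two tokens of the previous one, so context at a window boundary is not lost.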


Released: Feb 12. The tokenizers library provides an implementation of today's most used tokenizers, with a focus on performance and versatility. The Python package consists of bindings over the Rust implementation, where the high-level design is documented. Some pre-built tokenizers are provided to cover the most common cases, and you can easily load one of these from existing vocabulary files. Whenever these provided tokenizers don't give you enough freedom, you can build your own tokenizer by putting together the different parts you need. You can check how the provided tokenizers are implemented and adapt them to your own needs. For example, a byte-level BPE tokenizer can be built from its individual pieces and then saved to a single file.

Tokenization happens in steps. Once the text has been split into tokens, the second step is to convert those tokens into numbers, so we can build a tensor out of them and feed them to the model.
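The conversion from tokens to numbers can be sketched with an ordinary dictionary. This is an illustration only: the helper names `build_vocab` and `tokens_to_ids` and the `[UNK]` placeholder for unknown tokens are assumptions made for this example, not the library's API.

```python
def build_vocab(tokens, unk_token="[UNK]"):
    """Assign an integer id to each distinct token, reserving id 0 for unknowns."""
    vocab = {unk_token: 0}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def tokens_to_ids(tokens, vocab, unk_token="[UNK]"):
    """Look each token up in the vocabulary, falling back to the unknown id."""
    return [vocab.get(token, vocab[unk_token]) for token in tokens]


vocab = build_vocab(["the", "cat", "sat"])
print(tokens_to_ids(["the", "dog", "sat"], vocab))
```

The resulting list of integers is what gets packed into a tensor; a token missing from the vocabulary ("dog" here) maps to the unknown id.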

Tokenizers are one of the core components of the NLP pipeline. They serve one purpose: to translate text into data that can be processed by the model. In NLP tasks, the data that is generally processed is raw text. However, models can only process numbers, so tokenizers need to convert the raw text to numerical data. The goal is to find the most meaningful representation (that is, the one that makes the most sense to the model) and, if possible, the smallest one.

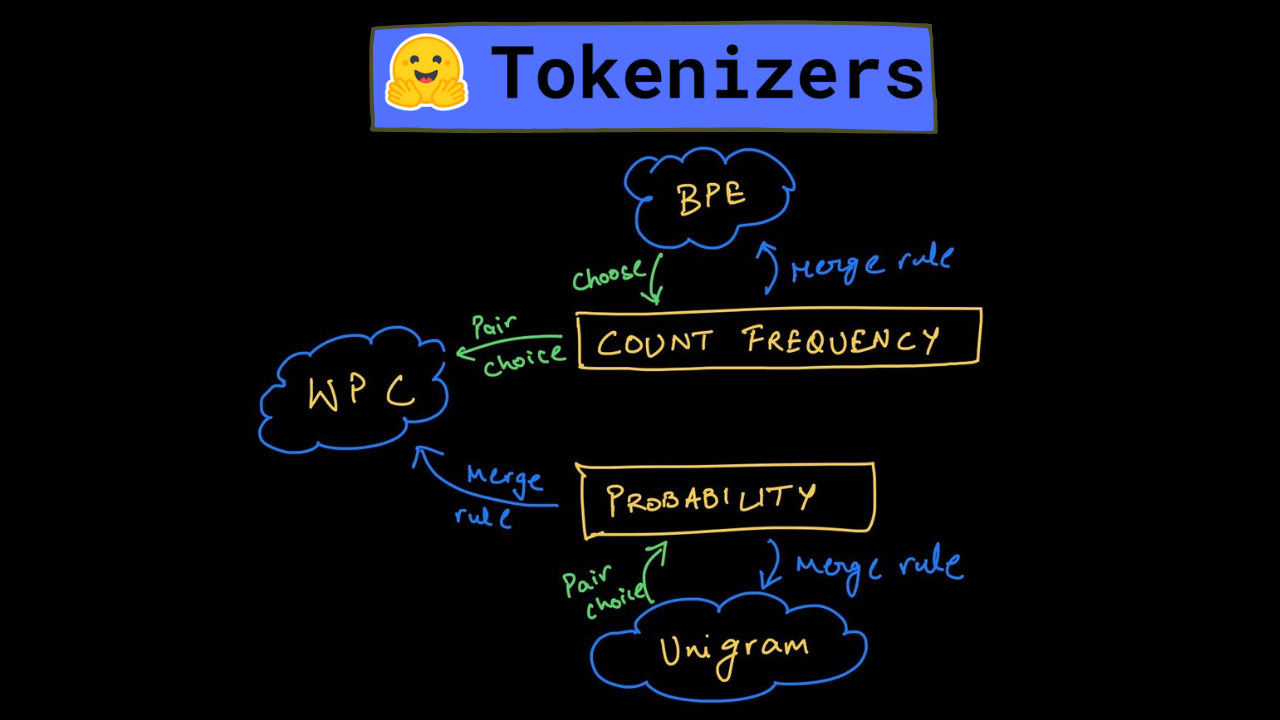

As we saw in the preprocessing tutorial, tokenizing a text means splitting it into words or subwords, which are then converted to ids through a look-up table. Converting words or subwords to ids is straightforward, so in this summary we will focus on splitting a text into words or subwords (i.e. tokenizing a text). Note that on each model page, you can look at the documentation of the associated tokenizer to know which tokenizer type was used by the pretrained model. For instance, if we look at BertTokenizer, we can see that the model uses WordPiece.

Splitting a text into smaller chunks is harder than it looks, and there are multiple ways of doing so. A simple way of tokenizing a text is to split it by spaces. This is a sensible first step, but it leaves punctuation attached to the words, as in the token "Transformers?", which is suboptimal. We should take the punctuation into account so that a model does not have to learn a different representation of a word and every possible punctuation symbol that could follow it, which would explode the number of representations the model has to learn.
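The difference between splitting on spaces and taking punctuation into account can be sketched with the standard library. The sample sentence and the two helper names are made up for this illustration, and the regular expression is a simple stand-in rather than the rule set of any real tokenizer.

```python
import re


def split_on_whitespace(text):
    """Naive tokenization: punctuation stays glued to the neighbouring word."""
    return text.split()


def split_on_punctuation(text):
    """Separate runs of word characters from individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


sample = "Do you like Transformers? We sure do."
print(split_on_whitespace(sample))
print(split_on_punctuation(sample))
```

With the first function, "Transformers?" and "do." are single tokens; with the second, the question mark and the full stop become tokens of their own, so the model sees the same "Transformers" token regardless of what follows it.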


When a Tokenizer encodes text, the input goes through a pipeline of steps, the first of which is normalization. The examples that require a Tokenizer use the tokenizer trained in the quicktour. Normalization is a set of operations applied to a raw string to make it cleaner and more uniform. Common operations include stripping whitespace, removing accented characters or lowercasing all text. A typical normalizer applies NFD Unicode normalization and then removes accents. When building a Tokenizer, you can customize its normalizer by changing the corresponding attribute. Of course, if you change the way a tokenizer applies normalization, you should probably retrain it from scratch afterward.
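What NFD normalization followed by accent removal does to a string can be shown with Python's standard `unicodedata` module. This sketch does not use the tokenizers library; the helper name `strip_accents` and the sample string are assumptions chosen for the illustration.

```python
import unicodedata


def strip_accents(text):
    """Apply NFD normalization, then drop the combining marks it exposes.

    NFD decomposes an accented character such as "é" into a base letter
    plus a combining mark (Unicode category "Mn"); removing those marks
    leaves only the base letters.
    """
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


print(strip_accents("Héllò hôw are ü?"))
```

Because a normalizer changes the text the model is trained on, the same normalization has to be applied at training time and at inference time, which is why changing it usually means retraining the tokenizer.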


Similar to AutoModel, the AutoTokenizer class will grab the proper tokenizer class in the library based on the checkpoint name, and can be used directly with any checkpoint. Note that the desired vocabulary size is a hyperparameter to define before training the tokenizer. Among the special tokens, the separator token (a string or an AddedToken, optional) separates two different sentences in the same input; it is used by BERT, for instance. An encoded input can also expose a list indicating the word corresponding to each token. When adding new tokens to the vocabulary, make sure to also resize the token embedding matrix of the model so that it matches the tokenizer. Finally, padding tokens make the sequences in a batch the same length; the attention mask marks them so that they are ignored by attention mechanisms or loss computation.
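The relationship between padding and attention masks can be sketched in a few lines of plain Python. The helper name `pad_batch` and the choice of 0 as the padding id are assumptions for this example; real tokenizers define their own padding token and id.

```python
def pad_batch(batch, pad_id=0):
    """Pad every sequence to the length of the longest one.

    Returns the padded ids together with an attention mask in which
    1 marks a real token and 0 marks padding that should be ignored.
    """
    max_len = max((len(seq) for seq in batch), default=0)
    input_ids = [seq + [pad_id] * (max_len - len(seq)) for seq in batch]
    attention_mask = [
        [1] * len(seq) + [0] * (max_len - len(seq)) for seq in batch
    ]
    return input_ids, attention_mask


ids, mask = pad_batch([[5, 6, 7], [8]])
print(ids)
print(mask)
```

The mask has the same shape as the padded ids, so a model can use it to skip the padded positions.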


There are different ways to split a text, and depending on the rules we apply, a different tokenized output is generated for the same text. spaCy and Moses are two popular rule-based tokenizers. Subword algorithms learn their rules from data instead. Byte-Pair Encoding counts how often each pair of adjacent symbols occurs in the training corpus: if "u" followed by "g" is the most frequent pair, the first merge rule the tokenizer learns is to group all "u" symbols followed by a "g" symbol together. Unigram saves the probability of each token in the training corpus on top of saving the vocabulary, so that the probability of each possible tokenization can be computed after training.

When calling a tokenizer, each sequence can be a string or a list of strings (a pretokenized string). When training a new tokenizer from an iterator, the training corpus should be a generator of batches of texts, for instance a list of lists of texts if you have everything in memory. You should now have sufficient knowledge of how tokenizers work to get started with the API.
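The step in which the pair "u" followed by "g" becomes the first merge rule can be reproduced with a small counting sketch. The word frequencies below are illustrative values assumed for this example, and `count_pairs` and `merge_pair` are hypothetical helpers, not functions of the tokenizers library.

```python
from collections import Counter


def count_pairs(word_freqs):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in word_freqs.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs


def merge_pair(word_freqs, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in word_freqs.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


# Assumed toy corpus: each word is a tuple of symbols with its frequency.
word_freqs = {
    ("h", "u", "g"): 10,
    ("p", "u", "g"): 5,
    ("p", "u", "n"): 12,
    ("b", "u", "n"): 4,
    ("h", "u", "g", "s"): 5,
}

best_pair, best_count = count_pairs(word_freqs).most_common(1)[0]
print(best_pair, best_count)
print(merge_pair(word_freqs, best_pair))
```

With these assumed counts, "u" followed by "g" occurs 20 times, more than any other pair, so it is merged first. Training repeats the count-and-merge step until the vocabulary reaches the desired size.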
