Azure status

Note: During this incident, as a result of a delay in determining exactly which customer subscriptions were impacted, we chose to communicate via the public Azure Status page. As described in our documentation, public PIR postings on this page are reserved for 'scenario 1' incidents: typically broadly impacting incidents across entire zones or regions, or even multiple zones or regions.

Summary of Impact: Between … and … UTC on 07 Feb (first occurrence), customers attempting to view their resources through the Azure Portal may have experienced latency and delays. Subsequently, between … and … UTC on 08 Feb (second occurrence), the issue recurred, with impact experienced by customers in locations across Europe using Azure services.

Preliminary Root Cause: External reports alerted us to higher-than-expected latency and delays in the Azure Portal. After further investigation, we determined that an issue impacting the Azure Resource Manager (ARM) service resulted in downstream impact for various Azure services. While impact for the first occurrence was focused on West Europe, the second occurrence was reported across European regions, including West Europe.

Completed: We have offboarded all tenants from the Continuous Access Evaluation (CAE) private preview, as a precaution. Next Steps: We continue investigating to identify all contributing factors for these occurrences. From November 20, …, this included RCAs for all issues about which we communicated publicly.

.

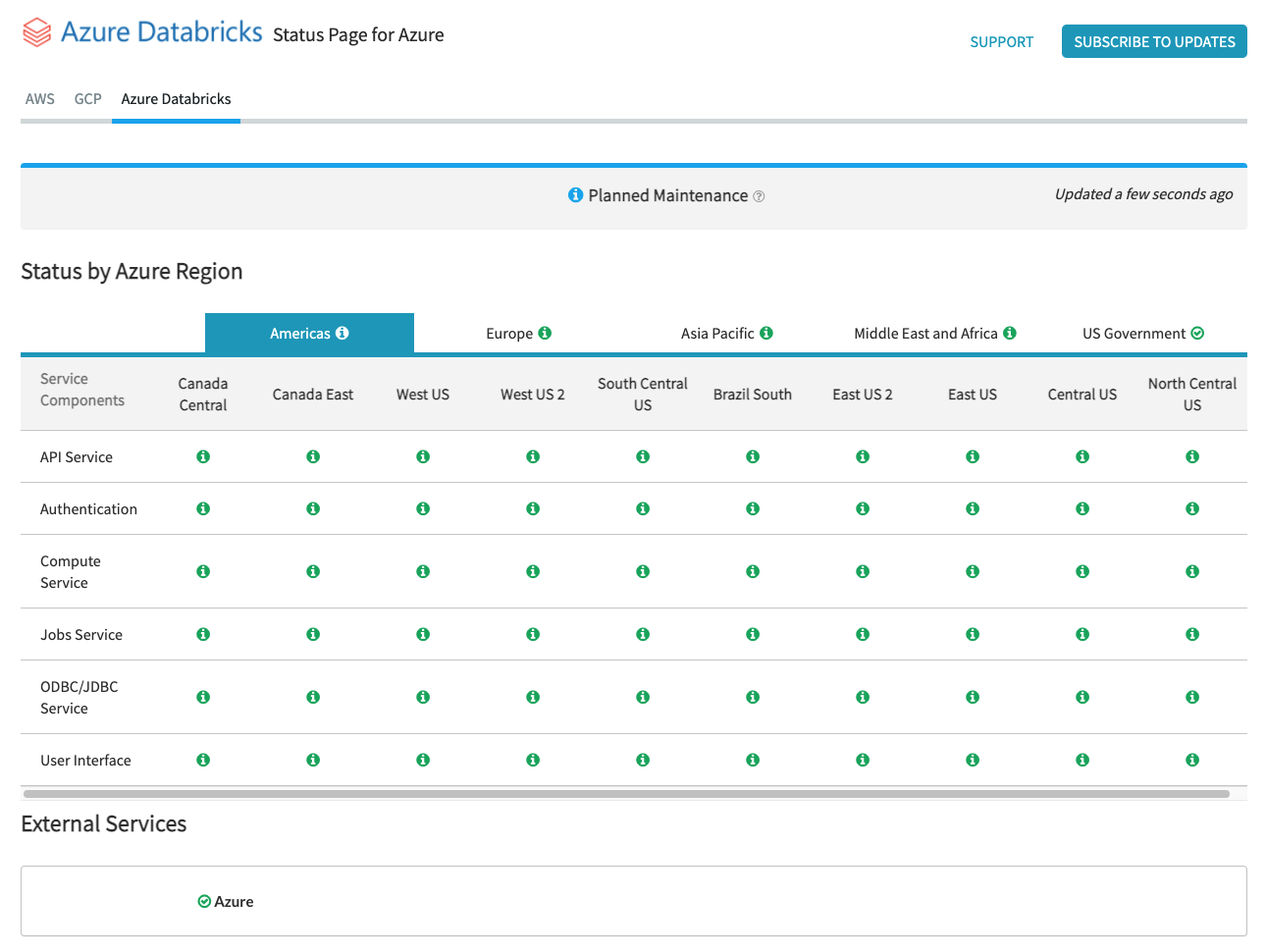

This article describes the information you can find on the status page and how you can subscribe to service notifications. You can view the status of a specific service on the status page. You can also subscribe to status updates on individual service components; this sends an alert whenever the status of a service you are subscribed to changes. The status page is broken down by Azure region: select one of the four main geos (Americas, Europe, Asia Pacific, or Middle East and Africa) to display all of the active regions in the selected geo. Service status is tracked on a per-region basis.

Azure status provides a global view of the health of Azure services and regions, including information on service availability. Azure status is available to everyone to view all services that report their service health, as well as incidents with wide-ranging impact. If you're a current Azure user, however, we strongly encourage you to use the personalized experience in Azure Service Health, which includes all outages, upcoming planned maintenance activities, and service advisories. This experience was updated on July 25, … The Azure status page is updated in real time as the health of Azure services changes.

Unbeknownst to us, this preview feature of the ARM CAE implementation contained a latent code defect that caused issues when authentication to Entra failed. During this incident, these services were unable to retrieve updated RBAC information; once the cached data expired, these services failed, rejecting incoming requests in the absence of up-to-date access policies. We mitigated by making a configuration change to disable the feature.

How are we making incidents like this less likely or less impactful?

Our Key Vault team has fixed the code that resulted in applications crashing when they were unable to refresh their RBAC caches. (Completed)
We are gradually rolling out a change to proceed with node restart when a tenant-specific call fails. (Completed)
We are improving monitoring signals on role crashes to reduce the time spent identifying the cause(s) and to detect availability impact earlier. (Estimated completion: February)
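The RBAC failure mode above, where services rejected requests (or crashed) once their cached access policies expired and could not be refreshed, can be illustrated with a cache that keeps serving its last-known-good policy when a refresh fails. This is a minimal sketch, not the Key Vault team's actual fix: `RbacCache`, `PolicyUnavailableError`, `fetch_policy`, `ttl_seconds`, and `max_stale_seconds` are all hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Optional, Set

# Hypothetical shape of an RBAC policy: principal -> set of role names.
Policy = Dict[str, Set[str]]


class PolicyUnavailableError(Exception):
    """Raised when no sufficiently recent RBAC policy is available."""


class RbacCache:
    """Illustrative RBAC cache that falls back to stale data when a refresh fails.

    fetch_policy, ttl_seconds and max_stale_seconds are assumptions for this sketch.
    """

    def __init__(self, fetch_policy: Callable[[], Policy],
                 ttl_seconds: float = 300.0,
                 max_stale_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_policy
        self._ttl = ttl_seconds
        self._max_stale = max_stale_seconds
        self._clock = clock
        self._policy: Optional[Policy] = None
        self._fetched_at = 0.0

    def _current_policy(self) -> Policy:
        now = self._clock()
        if self._policy is not None and now - self._fetched_at < self._ttl:
            return self._policy  # still fresh, no refresh needed
        try:
            self._policy = self._fetch()
            self._fetched_at = now
        except Exception as exc:
            # Refresh failed: keep serving the last-known-good policy instead of
            # crashing, but only within a bounded staleness window.
            if self._policy is None or now - self._fetched_at > self._max_stale:
                raise PolicyUnavailableError("RBAC policy could not be refreshed") from exc
        return self._policy

    def has_role(self, principal: str, role: str) -> bool:
        return role in self._current_policy().get(principal, set())
```

A caller could catch `PolicyUnavailableError` and reject only the affected request rather than letting the whole process crash. How long stale permissions may safely be honored is a security trade-off; this sketch simply exposes it as the hypothetical `max_stale_seconds` setting.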

What went wrong and why?

The latent code defect was triggered, causing ARM nodes, which are designed to restart periodically, to fail repeatedly upon startup. Due to these ongoing node restarts and failed startups, ARM began experiencing a gradual loss in capacity to serve requests. In addition, several internal offerings depend on ARM to support on-demand capacity and configuration changes, which led to degradation and failure when ARM was unable to process their requests.
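The startup failure described above, where nodes failed repeatedly instead of coming up, is the kind of problem addressed by the remediation item to "proceed with node restart when a tenant-specific call fails". A minimal sketch of that pattern, assuming a hypothetical per-tenant `load_tenant_config` call and a hypothetical `start_node` entry point; it illustrates isolating per-tenant errors and is not ARM's implementation.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class StartupResult:
    loaded: Dict[str, dict] = field(default_factory=dict)  # tenant -> config
    failed: List[str] = field(default_factory=list)         # tenants that errored


def start_node(tenants: Iterable[str],
               load_tenant_config: Callable[[str], dict]) -> StartupResult:
    """Load per-tenant configuration without letting one tenant abort startup.

    load_tenant_config is a hypothetical per-tenant call (for example, an
    authentication or configuration lookup) that may raise for some tenants.
    """
    result = StartupResult()
    for tenant in tenants:
        try:
            result.loaded[tenant] = load_tenant_config(tenant)
        except Exception:
            # Record and log the failure, then continue with the remaining
            # tenants so the node still starts and can serve everyone else.
            logging.exception("tenant %s failed to load; continuing startup", tenant)
            result.failed.append(tenant)
    return result
```

Tenants listed in `failed` could then be retried in the background; that retry policy is deliberately left out of this sketch.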
