Spark read csv

Spark SQL provides spark.read.csv() to read a CSV file or a directory of CSV files into a DataFrame, and DataFrame.write.csv() to write a DataFrame out as CSV. The option() function can be used to customize the behavior of reading or writing, such as controlling the header, the delimiter character, the character set, and so on. Other generic options can be found in Generic File Source Options.
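The following minimal PySpark sketch shows option() on both the read and the write side. It assumes an existing SparkSession called spark and hypothetical file paths. The functions receive the session as an argument, so the snippet itself needs no pyspark import.

```python
# Minimal PySpark sketch. `spark` is assumed to be an existing SparkSession;
# the paths passed in are hypothetical.

def read_csv_with_options(spark, path):
    """Read a CSV file, customizing the reader with option()."""
    return (
        spark.read
        .option("header", True)       # first line holds the column names
        .option("sep", ",")           # field delimiter
        .option("encoding", "UTF-8")  # character set of the input file
        .csv(path)
    )


def write_csv(df, path):
    """Write a DataFrame back to CSV, replacing any existing output."""
    df.write.option("header", True).mode("overwrite").csv(path)


# Usage (requires pyspark):
#   df = read_csv_with_options(spark, "data/input.csv")
#   write_csv(df, "data/output")
```

Each option() call returns the reader (or writer), so calls can be chained before the terminal csv() call.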

This function goes through the input once to determine the input schema if inferSchema is enabled. To avoid this extra pass over the data, disable the inferSchema option or specify the schema explicitly using schema(). For additional options, refer to Data Source Option for the Spark version you use.
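As a sketch of the explicit-schema route, the example below passes a DDL-formatted string to schema(). PySpark's DataFrameReader.schema() accepts either a StructType or such a string. The column names and types are hypothetical, and spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: declare the schema up front so Spark skips the inference pass.
# `spark` is an existing SparkSession; the columns below are hypothetical.
CITY_SCHEMA = "id INT, city STRING, population BIGINT"


def read_with_schema(spark, path):
    """Read a CSV file using an explicit DDL-string schema."""
    return (
        spark.read
        .schema(CITY_SCHEMA)     # a StructType would work here as well
        .option("header", True)  # treat the first line as a header, not data
        .csv(path)
    )
```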

You can also use a temporary view. You can configure several options for CSV file data sources. See the Apache Spark reference articles for supported read and write options.

When reading CSV files with a specified schema, the data in the files might not match the schema. For example, a field containing the name of a city will not parse as an integer. The consequences depend on the mode that the parser runs in. PERMISSIVE, the default, inserts nulls for fields that could not be parsed correctly. DROPMALFORMED drops lines that contain fields that could not be parsed. FAILFAST aborts the read if any malformed data is found. To set the mode, use the mode option. You can provide a custom path to the badRecordsPath option to record corrupt records to a file. The default behavior for malformed records changes when using the rescued data column.

Rescued data column: this feature is supported in Databricks Runtime 8. The rescued data column is returned as a JSON document containing the columns that were rescued and the source file path of the record. To remove the source file path from the rescued data column, you can set the SQL configuration spark.
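The following sketch selects one of the three parse modes when reading with a schema. It assumes spark is an existing SparkSession and that the schema is a DDL string. The local check against PARSE_MODES is an illustrative addition that catches typos early. Spark does not require it.

```python
# PySpark sketch: pick how the parser handles rows that do not match the schema.
# `spark` is an existing SparkSession; the local validation is an illustrative
# addition, not something Spark requires.
PARSE_MODES = ("PERMISSIVE", "DROPMALFORMED", "FAILFAST")


def read_csv_mode(spark, path, schema, mode="PERMISSIVE"):
    """Read a CSV file with an explicit schema and parse mode."""
    mode = mode.upper()
    if mode not in PARSE_MODES:
        # Fail fast on a misspelled mode instead of sending it to Spark.
        raise ValueError(f"unsupported parse mode: {mode}")
    return spark.read.schema(schema).option("mode", mode).csv(path)
```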

To keep corrupt records, a user can add a string-type field to a user-defined schema, named after the columnNameOfCorruptRecord option (by default _corrupt_record). The quote option sets a single character used for escaping quoted values where the separator can be part of the value.
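The sketch below keeps malformed lines in the default _corrupt_record column. It also sets the quote and escape characters explicitly, using their default values. The id and city columns are hypothetical, and spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: keep malformed rows in a string column instead of losing them.
# `spark` is an existing SparkSession; the id/city columns are hypothetical.
CORRUPT_COL = "_corrupt_record"  # default value of columnNameOfCorruptRecord
SCHEMA = f"id INT, city STRING, {CORRUPT_COL} STRING"


def read_keeping_corrupt(spark, path):
    """Read a CSV file, capturing unparseable lines in CORRUPT_COL."""
    return (
        spark.read
        .schema(SCHEMA)                                    # includes the STRING field
        .option("mode", "PERMISSIVE")                      # corrupt-record capture applies here
        .option("columnNameOfCorruptRecord", CORRUPT_COL)
        .option("quote", '"')    # quoted values may contain the separator
        .option("escape", "\\")  # escapes quotes inside an already quoted value
        .csv(path)
    )
```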

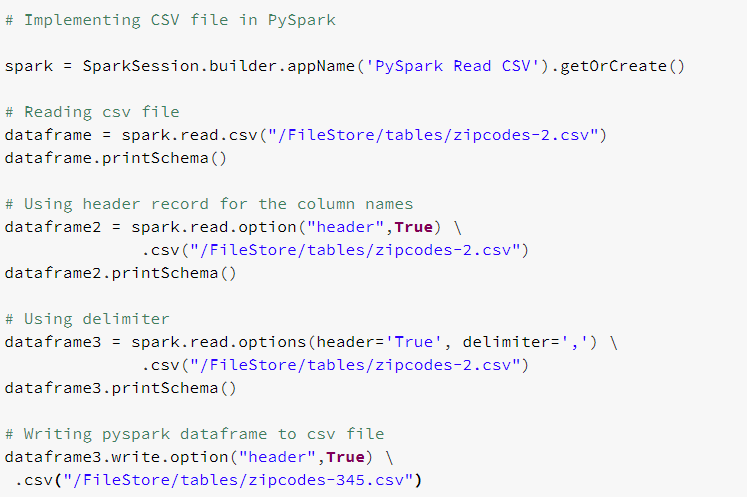

In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file. The examples use PySpark.

Using csv("path") or format("csv").load("path"): when you use the format("csv") method, you can also specify the data source by its fully qualified name. For built-in sources, you can simply use their short names (csv, json, parquet, jdbc, text, etc.). The examples refer to the zipcodes dataset. If your input file has a header with column names, you need to explicitly set the header option to True using option("header", True). Otherwise, the API treats the header as a data record. By default, PySpark reads all columns as strings (StringType). Later sections explain how to read the column names from the header record and infer each column's type from the data with inferSchema.
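Here is a sketch of the two equivalent entry points, each with the header option enabled. It assumes spark is an existing SparkSession and that path points at a CSV file with a header line, such as the zipcodes dataset.

```python
# PySpark sketch of the two equivalent entry points; `spark` is an existing
# SparkSession and `path` is hypothetical. Without inferSchema or a schema,
# every column comes back as StringType.

def read_with_header(spark, path):
    # Generic entry point: short source name "csv" plus load().
    via_format = spark.read.format("csv").option("header", True).load(path)
    # Shortcut: csv() accepts the same options as keyword arguments.
    via_csv = spark.read.csv(path, header=True)
    return via_format, via_csv
```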

DataFrames are distributed collections of data organized into named columns. In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a Spark DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using Scala. Spark reads CSV files in parallel, leveraging its distributed computing capabilities, which enables efficient processing of large datasets across a cluster of machines. You can read a CSV file into a DataFrame with spark.read.csv("path") or spark.read.format("csv").load("path"). Both methods take a file path as an argument.
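The path argument is not limited to a single file. In this sketch (PySpark rather than the Scala mentioned above, to keep one language throughout), a list of files and a whole directory are read, and the result is written back out. The file and directory names are hypothetical, and spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: the path argument can be one file, a list of files, or a
# directory. `spark` is an existing SparkSession; all paths are hypothetical.

def read_many(spark, files, directory):
    several = spark.read.csv(files, header=True)         # list of file paths
    everything = spark.read.csv(directory, header=True)  # every file in the directory
    return several, everything


def save_csv(df, out_dir):
    # Spark writes a directory of part files rather than a single CSV file.
    df.write.csv(out_dir, header=True, mode="overwrite")
```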

Spark provides several read options that let you customize how data is read from the sources explained above. This article discusses the different Spark read options and their configuration, with examples. Commonly used options include header, inferSchema, sep, quote, escape, mode, and dateFormat.
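Several options can be passed in one call with options(). The values below are illustrative rather than recommendations. Spark accepts option values as strings, and spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: pass several common read options at once with options().
# `spark` is an existing SparkSession; the values are illustrative only.
COMMON_READ_OPTIONS = {
    "header": "true",            # first line holds column names
    "inferSchema": "true",       # extra pass over the data to guess column types
    "sep": ",",                  # field separator
    "quote": '"',                # quote character
    "escape": "\\",              # escapes quotes inside quoted values
    "mode": "PERMISSIVE",        # how malformed records are handled
    "dateFormat": "yyyy-MM-dd",  # pattern for DATE columns
}


def read_with_common_options(spark, path):
    return spark.read.options(**COMMON_READ_OPTIONS).csv(path)
```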

This enhances the performance of subsequent operations by avoiding redundant reads from the file. Custom date formats set through the dateFormat option follow SimpleDateFormat formats.
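As a sketch of dateFormat, the example reads dates written as day/month/year into a DATE column. The schema and the "dd/MM/yyyy" pattern are hypothetical, and spark is assumed to be an existing SparkSession. Check the accepted pattern letters against the datetime pattern documentation for your Spark version.

```python
# PySpark sketch: parse dates written as day/month/year via dateFormat.
# `spark` is an existing SparkSession; the schema and pattern are hypothetical.
EVENTS_SCHEMA = "event STRING, happened_on DATE"


def read_events(spark, path):
    return (
        spark.read
        .schema(EVENTS_SCHEMA)               # declare the column as DATE
        .option("header", True)
        .option("dateFormat", "dd/MM/yyyy")  # e.g. 31/12/2023
        .csv(path)
    )
```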

The sep option sets the separator between fields. If None is set, it uses the default value, a comma (,).
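The sketch below picks a separator from the file extension and passes None when it does not recognize the extension, so that Spark falls back to its default comma. SEPARATORS and separator_for() are hypothetical helpers, not part of Spark, and spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: choose a separator from the file extension, falling back to
# Spark's default. SEPARATORS and separator_for() are hypothetical helpers, not
# part of Spark; `spark` is an existing SparkSession.
SEPARATORS = {".csv": ",", ".tsv": "\t", ".psv": "|"}


def separator_for(path):
    for ext, sep in SEPARATORS.items():
        if path.endswith(ext):
            return sep
    return None  # None lets Spark use its default separator ","


def read_delimited(spark, path):
    return spark.read.csv(path, sep=separator_for(path), header=True)
```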

Only corrupt records, that is, incomplete or malformed CSV, are dropped or throw errors. Spark parses only the columns that a query requires (CSV column pruning). As a result, which records count as corrupt can differ depending on the required set of fields.

Example: pitfalls of reading a subset of columns. The behavior of the CSV parser depends on the set of columns that are read.

The inferSchema option defaults to false. When it is set to true, Spark automatically infers column types based on the data.
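The sketch below enables inferSchema and, as a separate step, turns off CSV column pruning through the spark.sql.csv.parser.columnPruning.enabled configuration, which is enabled by default. With pruning off, every column is parsed, so the same rows are flagged as corrupt whichever columns a query selects. This costs extra parsing work, so treat it as a diagnostic setting rather than a default. spark is assumed to be an existing SparkSession.

```python
# PySpark sketch: infer column types, and optionally turn off CSV column pruning
# so corrupt-record detection does not depend on which columns a query reads.
# `spark` is an existing SparkSession; `path` is hypothetical.

def read_inferred(spark, path):
    # inferSchema defaults to false; enabling it adds a pass over the input.
    df = spark.read.options(header=True, inferSchema=True).csv(path)
    return df, df.dtypes  # (column name, type name) pairs


def disable_csv_column_pruning(spark):
    # Parse every column of each record, not only the ones a query selects.
    spark.conf.set("spark.sql.csv.parser.columnPruning.enabled", "false")
```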
