SageMaker PyTorch

With SageMaker multi-model endpoints (MMEs), you can host multiple models in a single serving container and serve all of them behind a single endpoint.
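
To make the multi-model idea concrete, here is a toy, pure-Python sketch (not SageMaker code) of one container that serves several models. Each request names its target model, the model is loaded on first use, and the least recently used model is unloaded when a hypothetical capacity limit is reached. In the real service, a client chooses the model per request with the `TargetModel` parameter of the `InvokeEndpoint` API; the class name, the `capacity` limit, and the LRU eviction policy below are illustrative assumptions.

```python
from collections import OrderedDict
from typing import Callable, Dict, List

# A "model" here is any callable from an input to an output (toy assumption).
Model = Callable[[float], float]


class ToyMultiModelContainer:
    """Toy sketch of a multi-model container (hypothetical, not SageMaker code).

    Models are loaded on their first request; when `capacity` models are
    already in memory, the least recently used one is unloaded first.
    """

    def __init__(self, loaders: Dict[str, Callable[[], Model]], capacity: int) -> None:
        self._loaders = loaders      # model name -> function that loads the model
        self._capacity = capacity    # max number of models held in memory at once
        self._loaded: "OrderedDict[str, Model]" = OrderedDict()
        self.load_count = 0          # total number of model loads so far

    def invoke(self, target_model: str, payload: float) -> float:
        """Route one request to `target_model`, loading it if necessary."""
        if target_model in self._loaded:
            # Already in memory: mark it as the most recently used model.
            self._loaded.move_to_end(target_model)
        else:
            if len(self._loaded) >= self._capacity:
                # Unload the least recently used model to make room.
                self._loaded.popitem(last=False)
            self._loaded[target_model] = self._loaders[target_model]()
            self.load_count += 1
        return self._loaded[target_model](payload)

    @property
    def loaded_models(self) -> List[str]:
        """Names of models currently in memory, least recently used first."""
        return list(self._loaded)
```

For example, with `capacity=2`, invoking three different models in turn unloads whichever model was used least recently when the third arrives; invoking that model again reloads it, which mirrors the extra latency a model can see on its first invocation after being unloaded.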

Hugging Face Transformers also provides the Trainer class and pretrained model classes for PyTorch, which reduce the effort of configuring natural language processing (NLP) models. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. Keep input shapes static where you can: a dynamic input shape can trigger recompilation of the model and might increase total training time. Padding inputs to a fixed length is one way to keep shapes constant; for the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation.
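
The sketch below illustrates why padding matters, using plain Python lists of token IDs instead of a real tokenizer. Padding each batch only to its own longest sequence produces a different shape per batch, whereas padding and truncating to a fixed length produces one shape every time, so a graph compiler has only one input shape to compile for. With a Hugging Face tokenizer, the fixed-length behavior corresponds to passing `padding="max_length"`, `truncation=True`, and a `max_length`; the pad ID of 0 and the helper names here are assumptions for illustration.

```python
from typing import List, Tuple

Batch = List[List[int]]  # a batch of token-ID sequences


def pad_to_fixed_length(batch: Batch, max_length: int, pad_id: int = 0) -> Tuple[Batch, Batch]:
    """Truncate or pad every sequence to exactly `max_length` tokens.

    Returns (input_ids, attention_mask). pad_id=0 is an assumption; real
    tokenizers define their own padding token.
    """
    input_ids, attention_mask = [], []
    for seq in batch:
        seq = seq[:max_length]                  # truncate long sequences
        n_pad = max_length - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)
        attention_mask.append([1] * len(seq) + [0] * n_pad)  # 0 marks padding
    return input_ids, attention_mask


def pad_to_longest(batch: Batch, pad_id: int = 0) -> Batch:
    """Dynamic padding: pad only to the longest sequence in this batch."""
    longest = max(len(seq) for seq in batch)
    return [seq + [pad_id] * (longest - len(seq)) for seq in batch]


def shape(batch: Batch) -> Tuple[int, int]:
    """(batch size, sequence length) of an already-padded batch."""
    return (len(batch), len(batch[0]))


batches = [[[5, 6], [7]], [[1, 2, 3, 4], [9, 9, 9]]]
dynamic_shapes = {shape(pad_to_longest(b)) for b in batches}
fixed_shapes = {shape(pad_to_fixed_length(b, max_length=4)[0]) for b in batches}
print(len(dynamic_shapes), len(fixed_shapes))  # 2 1: two shapes vs. one shape
```

The trade-off is wasted computation on padding tokens, which the attention mask marks so the model can ignore them; choosing `max_length` close to the typical sequence length keeps that waste small.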

Outside of her professional endeavors, Li enjoys swimming, traveling, following the latest advancements in technology, and spending quality time with her family.

In lazy mode, tensors are placeholders for the computational graph until they are materialized after compilation and evaluation are complete.
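
The placeholder idea can be illustrated with a toy, pure-Python class that holds a single number. Arithmetic on it only records a node in a graph; the value is computed when `materialize()` is called. This is a teaching sketch, not the torch_xla API: the class and method names are hypothetical. It also hints at why reading an intermediate value (for example, printing a loss) is costly in lazy mode, because reading the value forces the pending graph to be evaluated.

```python
from typing import Callable, Optional, Tuple


class LazyScalar:
    """Toy 'lazy tensor' holding a single number (illustration only).

    Arithmetic records a node in a computation graph instead of computing a
    result; nothing is evaluated until materialize() is called. This mimics
    the idea behind lazy mode, not the torch_xla API.
    """

    nodes_evaluated = 0  # counts graph nodes actually computed, across instances

    def __init__(self, inputs: Tuple["LazyScalar", ...] = (),
                 fn: Optional[Callable[..., float]] = None,
                 value: Optional[float] = None) -> None:
        self.inputs = inputs   # upstream nodes in the graph
        self.fn = fn           # how to combine the inputs once they are known
        self._value = value    # None means "placeholder, not materialized yet"

    @staticmethod
    def constant(v: float) -> "LazyScalar":
        return LazyScalar(value=v)

    def __add__(self, other: "LazyScalar") -> "LazyScalar":
        return LazyScalar((self, other), lambda a, b: a + b)  # record, don't compute

    def __mul__(self, other: "LazyScalar") -> "LazyScalar":
        return LazyScalar((self, other), lambda a, b: a * b)  # record, don't compute

    def materialize(self) -> float:
        """Evaluate the recorded graph on demand and cache the result."""
        if self._value is None:
            args = [node.materialize() for node in self.inputs]
            self._value = self.fn(*args)
            LazyScalar.nodes_evaluated += 1
        return self._value


x = LazyScalar.constant(3.0)
y = LazyScalar.constant(4.0)
z = (x + y) * y                    # builds a graph; no arithmetic happens yet
print(LazyScalar.nodes_evaluated)  # 0
print(z.materialize())             # 28.0
print(LazyScalar.nodes_evaluated)  # 2
```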

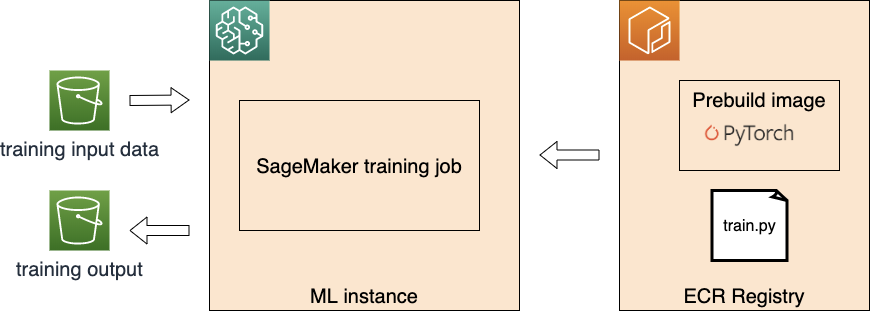

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker. Just as with the other deep learning frameworks that SageMaker supports, you can now write your PyTorch script as you normally would and rely on SageMaker training to set up the distributed training cluster, transfer data, and even tune hyperparameters. On the inference side, SageMaker provides a managed, highly available online endpoint that can be scaled up automatically as needed. Supporting many deep learning frameworks is important to developers, because each framework has its own strengths. PyTorch is used heavily by deep learning researchers, and it is also rapidly gaining popularity among developers due to its flexibility and ease of use. TensorFlow is well established and continues to add useful features with each release.
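
As a sketch of what "write your PyTorch script as you normally would" can look like, the entry-point helper below reads hyperparameters from command-line flags and reads locations from the `SM_MODEL_DIR`, `SM_CHANNEL_TRAINING`, `SM_HOSTS`, and `SM_CURRENT_HOST` environment variables that SageMaker sets in the training container. The `training` channel name assumes you pass your data under that name, and the hyperparameter names (`epochs`, `lr`) are placeholders; the local fallback defaults are also assumptions so the same script runs outside SageMaker.

```python
import argparse
import json
import os
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Read hyperparameters (command-line flags) and SageMaker paths (SM_* env vars).

    Defaults fall back to local paths so the same script also runs on a laptop.
    The hyperparameter names and the "training" channel name are illustrative.
    """
    parser = argparse.ArgumentParser()
    # Hyperparameters set on the estimator arrive as flags, e.g. --epochs 3.
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--lr", type=float, default=0.01)
    # Where to save the trained model; SageMaker uploads this directory to S3.
    parser.add_argument("--model-dir", default=os.environ.get("SM_MODEL_DIR", "./model"))
    # Where the input channel named "training" is made available.
    parser.add_argument("--train-dir", default=os.environ.get("SM_CHANNEL_TRAINING", "./data"))
    # All hosts in the training cluster (a JSON list) and the current host's name.
    # argparse applies `type` to string defaults, so the default is parsed too.
    parser.add_argument("--hosts", type=json.loads,
                        default=os.environ.get("SM_HOSTS", '["localhost"]'))
    parser.add_argument("--current-host", default=os.environ.get("SM_CURRENT_HOST", "localhost"))
    return parser.parse_args(argv)


args = parse_args(["--epochs", "3"])
print(args.epochs, args.lr)  # 3 0.01
# ... an ordinary PyTorch training loop would go here, then save to args.model_dir ...
```

With the SageMaker Python SDK, you would pass such a script as the `entry_point` of a `sagemaker.pytorch.PyTorch` estimator; the values in its `hyperparameters` dictionary are forwarded to the script as flags like these.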

A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas, generate synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The next images show the effect of synthesizing scenery based on a semantic layout. We also introduce a use case from synthetic data generation, one of the most popular areas of GAN application.

The cost savings achieved through resource sharing and simplified model management make SageMaker MMEs an excellent choice for hosting models at scale on AWS. Within the serving container, the handle method is the main entry point for requests: it accepts a request object and returns a response object.

The image-editing model accepts additional arguments, such as the original image and a mask image, allowing quick modification and restoration of existing content. The model then changes the highlighted object based on the provided instructions. For more information on SAM, refer to its website and research paper.

He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making the deployment of deep learning models more accessible.

Currently, three syncfree optimizers are available, and you should use them where possible for best performance. For example, replace the standard Adam optimizer and GradScaler with their compatible counterparts. If you use the Hugging Face Trainer, use the TrainingArguments class to achieve this. During training, you might want to examine intermediate results such as loss values. In that case, the printing of lazy tensors should be wrapped using xm., because printing a lazy tensor forces it to be materialized, which triggers compilation and evaluation outside the normal training step.
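
A minimal sketch of that request/response contract follows. The `Request` and `Response` classes, the JSON `prompt` field, and the status codes are hypothetical and are not the interface of any particular model server; the point is the shape of `handle`: validate and parse the request, run the already-loaded model, and wrap the result in a response.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Request:  # hypothetical request object
    body: bytes
    content_type: str = "application/json"


@dataclass
class Response:  # hypothetical response object
    status: int
    body: bytes
    headers: Dict[str, str] = field(default_factory=lambda: {"Content-Type": "application/json"})


class Handler:
    """Per-model handler; handle() is the entry point for every request."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model  # loaded once when the model is loaded, reused per request

    def handle(self, request: Request) -> Response:
        # 1. Validate the request format.
        if request.content_type != "application/json":
            return self._error(415, "expected application/json")
        # 2. Parse the payload (ValueError covers malformed JSON and bad UTF-8).
        try:
            prompt = json.loads(request.body)["prompt"]
        except (ValueError, KeyError, TypeError):
            return self._error(400, "body must be a JSON object with a 'prompt' field")
        # 3. Run inference and wrap the result in a response object.
        output = self.model(prompt)
        return Response(200, json.dumps({"output": output}).encode("utf-8"))

    @staticmethod
    def _error(status: int, message: str) -> Response:
        return Response(status, json.dumps({"error": message}).encode("utf-8"))


handler = Handler(model=lambda prompt: prompt.upper())  # stand-in "model"
print(handler.handle(Request(b'{"prompt": "a red car"}')).body)  # b'{"output": "A RED CAR"}'
```

Keeping model loading in the constructor and only inference in `handle` means the expensive step happens once per model, while each request pays only for parsing and inference.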

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical part of bringing value to customers.

To find more example scripts, see the Hugging Face Transformers language modeling example scripts. If you use the Trainer class, see also Optimizer in the Hugging Face Transformers documentation.

The configuration is set at a per-model level, and an example config file is shown in the following code. For a complete list of parameters, refer to the GitHub repo. The following table summarizes the differences between single-model and multi-model endpoints for this example. A detailed illustration of this second user flow is as follows.

He is passionate about working with customers and is motivated by the goal of democratizing machine learning.
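
Because configuration is per model, each model's effective settings can be thought of as shared defaults overlaid with that model's own overrides. The sketch below shows that resolution step with plain dictionaries; every key, value, and model name in it is hypothetical, and a real serving stack defines its own config file format and parameters.

```python
from typing import Any, Dict

# Hypothetical settings shared by every model on the endpoint.
DEFAULTS: Dict[str, Any] = {"workers": 1, "batch_size": 1, "timeout_s": 60}

# Hypothetical per-model overrides, keyed by model name.
PER_MODEL: Dict[str, Dict[str, Any]] = {
    "model-a": {"timeout_s": 300},   # e.g. a slow model gets a longer timeout
    "model-b": {"batch_size": 4},    # e.g. a small model batches requests
}


def resolve_config(model_name: str,
                   per_model: Dict[str, Dict[str, Any]] = PER_MODEL) -> Dict[str, Any]:
    """Return one model's effective settings: defaults, then its overrides."""
    overrides = per_model.get(model_name, {})
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        # Catch typos such as "batchsize" instead of silently ignoring them.
        raise KeyError(f"unknown config keys for {model_name}: {sorted(unknown)}")
    return {**DEFAULTS, **overrides}


print(resolve_config("model-a"))  # {'workers': 1, 'batch_size': 1, 'timeout_s': 300}
```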