PyTorch loss functions

Loss functions are fundamental to training machine learning models: in most projects, there is no way to drive a model toward correct predictions without one. In layman's terms, a loss function is a mathematical function or expression that measures how well a model is doing on some dataset. Knowing this informs many decisions during training, such as switching to a new, more powerful model or changing the loss function itself to a different type. Many loss functions have been developed over the years, each suited to a particular training task. In this article, we are going to explore the different loss functions that are part of the PyTorch nn module.

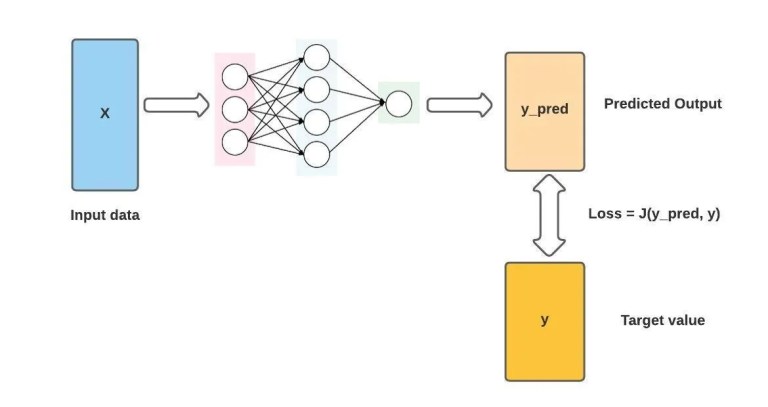

Deep learning training uses a feedback mechanism called a loss function to evaluate mistakes and improve learning trajectories. In this article, we will go in depth on loss functions and their implementation in the PyTorch framework. Loss functions measure how close a predicted value is to the actual value. When our model makes predictions that are very close to the actual values on both our training and testing datasets, it means we have a pretty robust model. In other words, the loss function is a mathematical function or expression used to measure a model's performance on a dataset, and it guides the training process toward correct predictions. The objective of the learning process is to minimize the error given by the loss function, improving the model after every iteration of training. Different loss functions suit different problems, each crafted by researchers to ensure stable gradient flow during training. Google Colab is helpful if you prefer to run your PyTorch code in your web browser.
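To make "how close a predicted value is to the actual value" concrete, here is a minimal sketch using mean squared error. The tensors are made-up values chosen only for illustration, and `nn.MSELoss` is used here as one example among the many loss functions PyTorch offers.

```python
import torch
import torch.nn as nn

# Hypothetical predictions and targets, chosen only for illustration.
predictions = torch.tensor([2.5, 0.0, 2.0, 8.0])
targets = torch.tensor([3.0, -0.5, 2.0, 7.0])

# nn.MSELoss averages the squared differences by default (reduction="mean").
loss_fn = nn.MSELoss()
loss = loss_fn(predictions, targets)

# The same quantity computed by hand, to show what the loss measures.
manual = ((predictions - targets) ** 2).mean()

print(loss.item(), manual.item())
```

The closer the predictions are to the targets, the smaller this number becomes; identical tensors would give a loss of zero.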

Loss functions are a crucial component of neural network training: every machine learning model is trained by optimization, which reduces the loss and moves the model toward correct predictions. But what exactly are loss functions, and how do you use them? A loss function is an expression used to measure how close the predicted value is to the actual value. This expression outputs a value called the loss, which tells us how well our model is performing. By reducing this loss value over further training, the model can be optimized to output values that are closer to the actual values. PyTorch is a popular open-source Python library for building deep learning models effectively.

In this guide, you will learn all you need to know about PyTorch loss functions. Loss functions give your model the ability to learn by determining where mistakes need to be corrected. In technical terms, training a machine learning model is an optimization problem in which the goal is to minimize the error reported by the loss function. Whether you are working on deep learning or other machine learning problems, loss functions play a pivotal role in training your models. A loss function assesses how well a model is performing at its task and is used in combination with the PyTorch autograd functionality to help the model improve. It can be helpful to think of our deep learning model as a student trying to learn and improve. A loss function, also known as a cost or objective function, quantifies the difference between the predictions made by your model and the actual truth values. Because of this, loss functions serve as the guiding force behind the training process, allowing your model to improve over time.
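The interplay between a loss function and autograd can be sketched with a tiny training loop. This is an illustrative example rather than a recommended recipe: the toy data (`y = 2x + 1`), the single `nn.Linear` layer, the SGD optimizer, the learning rate, and the step count are all assumptions made for this sketch.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the sketch is repeatable

# Toy data following y = 2x + 1 (made up for this example).
x = torch.linspace(-1.0, 1.0, 20).unsqueeze(1)
y = 2.0 * x + 1.0

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(200):
    optimizer.zero_grad()         # clear gradients from the previous step
    loss = loss_fn(model(x), y)   # how far are predictions from targets?
    loss.backward()               # autograd computes d(loss)/d(parameters)
    optimizer.step()              # nudge parameters to reduce the loss
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

The loss value is the only signal the optimizer receives: `backward()` turns it into gradients, and each `step()` uses those gradients to move the parameters in the direction that lowers the loss.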

We will also take a deep dive into how PyTorch exposes these loss functions to users as part of its nn module API by building a custom one.
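As a rough sketch of what building a custom loss can look like, the example below subclasses `nn.Module` and implements `forward`, which is the same pattern the built-in losses follow. The class name `MeanAbsoluteErrorLoss` is hypothetical and the input values are made up; the result is compared against the built-in `nn.L1Loss` only as a sanity check.

```python
import torch
import torch.nn as nn


class MeanAbsoluteErrorLoss(nn.Module):
    """Hypothetical custom loss: the mean of absolute differences."""

    def forward(self, predictions, targets):
        return torch.mean(torch.abs(predictions - targets))


# Made-up values; requires_grad=True lets us check that gradients flow.
predictions = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
targets = torch.tensor([1.5, 2.0, 1.0])

custom_loss = MeanAbsoluteErrorLoss()(predictions, targets)
builtin_loss = nn.L1Loss()(predictions, targets)

# Because the loss is built from differentiable tensor operations,
# autograd can backpropagate through it without extra work.
custom_loss.backward()

print(custom_loss.item(), builtin_loss.item())
```

Writing the loss with ordinary tensor operations is what makes it usable in training: no hand-written gradient is needed as long as every operation is differentiable.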

Some loss functions compare probability distributions rather than individual values. With such a loss function, you can compute the amount of information lost, expressed in bits, when the predicted probability distribution is used to estimate the expected target probability distribution. If you want to immerse yourself more deeply in the subject or learn about other loss functions, you can visit the official PyTorch documentation.
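The text does not name the loss that measures lost information between distributions, but the description reads like Kullback-Leibler divergence. Assuming that is what is meant, the sketch below uses `nn.KLDivLoss` on two made-up distributions. Note that `nn.KLDivLoss` works with natural logarithms, so the result is in nats; dividing by `ln 2` converts it to bits.

```python
import math

import torch
import torch.nn as nn

# Hypothetical distributions over three classes (a batch of one sample).
target = torch.tensor([[0.5, 0.25, 0.25]])
predicted = torch.tensor([[0.25, 0.5, 0.25]])

# nn.KLDivLoss expects log-probabilities as input and, by default,
# plain probabilities as the target.
kl = nn.KLDivLoss(reduction="batchmean")
loss_nats = kl(predicted.log(), target)

# Convert from nats to bits (assumption: bits are the desired unit).
loss_bits = loss_nats / math.log(2)

print(loss_nats.item(), loss_bits.item())
```

For these particular values the divergence works out to a quarter of a bit, and it would be zero if the two distributions were identical.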

By implementing custom loss functions, you can enhance your machine learning models and achieve better results. The torch.nn module provides building blocks, loss functions among them, that are essential to training a model.
