PySpark withColumn

withColumn is a DataFrame transformation: it returns a new DataFrame with the specified changes and leaves the original DataFrame unchanged.

The following example shows how withColumn works in practice. Suppose we have a PySpark DataFrame that contains information about points scored by basketball players on various teams. We can use withColumn to create a new column named rating that returns 1 if the value in the points column is greater than 20, and 0 otherwise. The new rating column then contains either 0 or 1. Note: the complete documentation for the PySpark withColumn function is available in the official PySpark API reference.

The related DataFrame.withColumns method returns a new DataFrame by adding multiple columns or replacing existing columns that have the same names. Its colsMap argument is a map (a Python dict) of column name to Column; each column must only refer to attributes supplied by this DataFrame, and it is an error to add columns that refer to some other DataFrame. New in version 3. Currently, only a single map is supported.

TaskResourceRequest pyspark. Read More.

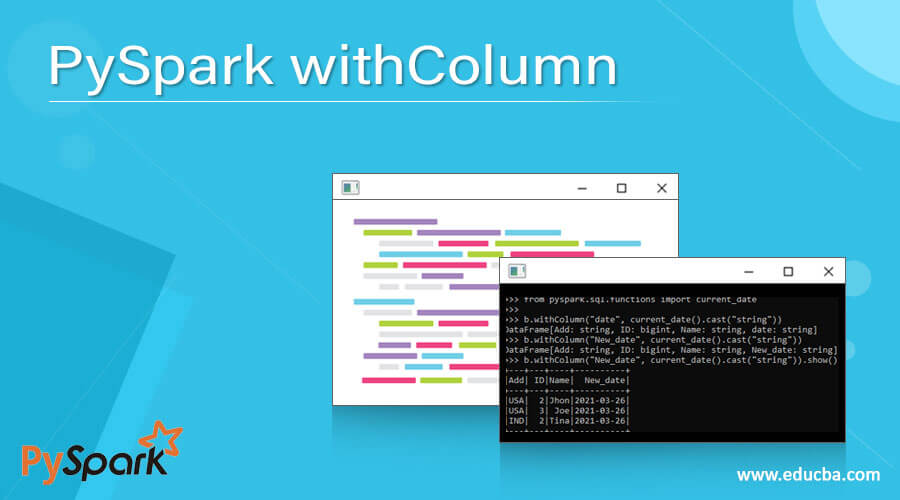

In PySpark, withColumn is a widely used DataFrame transformation function. It can change the value of a column, convert the data type of an existing column, or create a new column. To change a column's data type, combine withColumn with the cast function. To change the value of an existing column, pass the existing column name as the first argument and the value to assign as the second argument; the second argument must be of Column type. Passing a new column name as the first argument creates a new column instead. PySpark is the Python API for Apache Spark, which is developed by The Apache Software Foundation.

How do you apply a function to a column in PySpark? You can apply a built-in or custom function to a column with withColumn, select, or SQL. To apply a custom function, first create a Python function and then register it as a UDF. With withColumn, the function's result becomes the value of a new or existing column, for example by applying the built-in upper function to a column of df. select chooses columns from a PySpark DataFrame, and you can apply a function to a column while selecting it. To use SQL, register the DataFrame as a temporary view and run the query against it; the view remains available until you end your current SparkSession. A PySpark UDF (user-defined function) lets you apply custom Python logic to a column, but UDFs are among the most expensive operations because Spark cannot optimize them, so use them only when no built-in function does the job.

To execute the PySpark withColumn function you must supply two arguments. The first argument is the name of the new or existing column. The second argument is the value used to populate that column, and it must be a Column: a constant wrapped in lit(), an existing PySpark column, or a PySpark expression built from columns. Overall, withColumn is a convenient way to transform the data within a DataFrame and is widely used in PySpark applications. It is not always the best choice, though: each call adds a projection to the query plan, so calling it many times (for example, in a loop that adds many columns) can produce very large plans and hurt performance. Adding all the columns in a single select is the usual alternative. If you ever want to add a new column with a constant value, wrap the constant in lit() and pass it as the second argument.

The PySpark withColumn function is useful for creating new columns, transforming existing DataFrame columns, and changing a column's data type. This article covers its most common uses.

The PySpark lit function is used with withColumn to add a constant value as a DataFrame column. Note that the second argument must be of Column type, which is why the constant is wrapped in lit().

In a variant of the rating example that uses text labels, the value of points in the second row is greater than 20, so the rating column returns Good.