Proxmox VE cluster

Our modern society depends heavily on information provided by computers over the network. Mobile devices amplified that dependency, because people can access the network any time from anywhere. If you provide such services, it is very important that they are available most of the time. We can mathematically define availability as the ratio of (A), the total time a service is capable of being used during a given interval, to (B), the length of the interval.

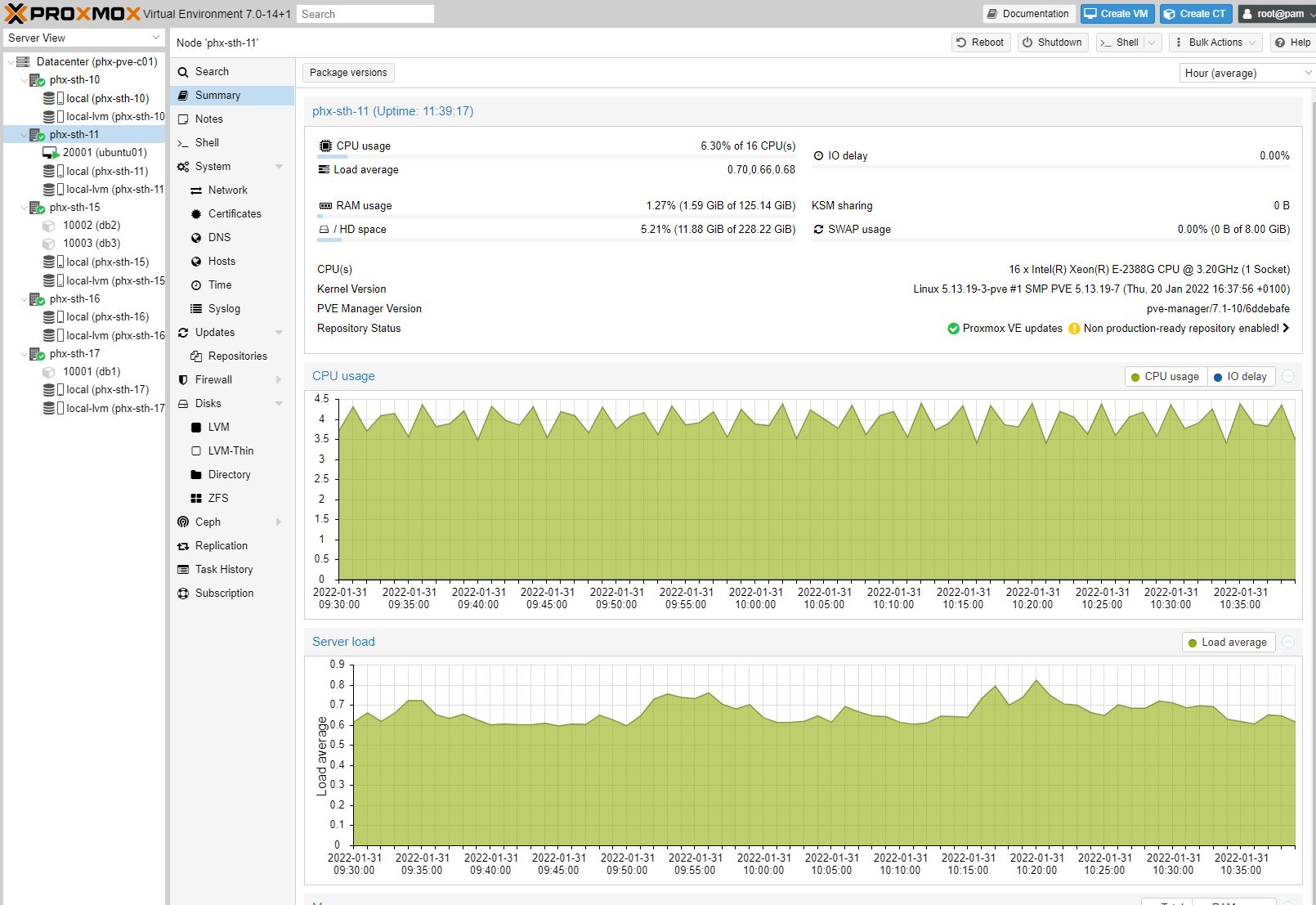
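As a rough illustration of the ratio defined above, the following Python sketch computes availability from (A) and (B). The function name `availability` and the sample figures (one hour of downtime in a 30-day interval) are assumptions made for this example, not values from the text.

```python
def availability(uptime_seconds: float, interval_seconds: float) -> float:
    """Return availability as the ratio of usable time (A) to interval length (B)."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    if not 0 <= uptime_seconds <= interval_seconds:
        raise ValueError("uptime must lie within the interval")
    return uptime_seconds / interval_seconds


# Hypothetical example: one hour of downtime during a 30-day interval.
interval = 30 * 24 * 3600
downtime = 3600
ratio = availability(interval - downtime, interval)
print(f"Availability: {ratio:.4%}")
```

Expressed this way, even a single hour of downtime per month already keeps the service below "three nines" (99.9%).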

Proxmox VE is a platform to run virtual machines and containers. It is based on Debian Linux and is completely open source. One main design goal was to make administration as easy as possible. You can use Proxmox VE on a single node, or assemble a cluster of many nodes. All management tasks can be done using our web-based management interface, and even a novice user can install and set up Proxmox VE within minutes. While many people start with a single node, Proxmox VE can scale out to a large set of clustered nodes.


In this tutorial, we will look at how to set up a cluster in Proxmox. A Proxmox cluster is a group of Proxmox servers networked together; within the cluster, the servers share resources and function as a single system. There are many benefits to setting one up: you can manage all of your Proxmox instances centrally, migrate virtual machines and containers from one host to another, and easily configure high availability (HA). The exception is a server you use only for testing, and even then you might gain some benefit from clustering it.

The steps below explain how to set up a Proxmox cluster. Before proceeding, install Proxmox VE on each node. In my example, I have two Proxmox servers, pve-test and pve-test2, which we will use to configure this. Please keep in mind that you should have three servers at minimum, but I am using two for this example.

Give the cluster a name, then select Create. Before we join the cluster, we have to set up a few firewall rules so that both Proxmox instances can talk to each other. On the first Proxmox server, select Datacenter, Firewall, then Add to create a new firewall rule.
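The recommendation of at least three servers can be motivated with a small majority calculation. The sketch below assumes the common setup in which every node carries exactly one vote and the cluster stays operational only while more than half of the votes are present; the function names are hypothetical and the model ignores extras such as external vote devices.

```python
def quorum_votes(total_nodes: int) -> int:
    """Smallest number of votes that forms a strict majority (one vote per node assumed)."""
    if total_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return total_nodes // 2 + 1


def tolerated_failures(total_nodes: int) -> int:
    """How many nodes may fail while the remaining nodes still hold a majority."""
    return total_nodes - quorum_votes(total_nodes)


for nodes in (2, 3, 5):
    print(
        f"{nodes} nodes: quorum needs {quorum_votes(nodes)} votes, "
        f"tolerates {tolerated_failures(nodes)} failed node(s)"
    )
```

Under these assumptions a two-node cluster such as pve-test and pve-test2 cannot lose either node without losing its majority, while a three-node cluster can lose one.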

This is not the recommended method, proceed with caution.


A cluster is a collection of two or more nodes that offers an avenue for moving resources between servers. Migrating resources makes it possible to undertake tasks such as upgrading servers and applying patches with minimal downtime. In Proxmox, you can easily create a cluster and configure High Availability to ensure resources such as VMs automatically move when a node breaks down. That said, let us now configure a cluster and set up High Availability on Proxmox. The first step is to create a cluster.


Proxmox VE 2. A Proxmox VE cluster consists of several nodes (up to 16 physical nodes, probably more). First, install the Proxmox VE nodes; see Installation. Changing the hostname and IP is not possible after cluster creation. Currently, cluster creation has to be done on the console; you can log in to the Proxmox VE node via SSH. All settings can be made via "pvecm", the Proxmox VE cluster manager toolkit. To create the cluster, log in via SSH to the first Proxmox VE node. Use a unique name for your cluster; this name cannot be changed later. To add nodes, log in via SSH to each of the other Proxmox VE nodes. The procedure for removing a node should be read as deleting a cluster node, i.e. permanently removing it.
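The console workflow above can be sketched as a short Python helper that only assembles the `pvecm` command lines and prints them, without executing anything. The subcommands `create`, `add` and `status` belong to `pvecm`; the cluster name `demo-cluster`, the address `192.0.2.10` and the helper function names are placeholders assumed for this example.

```python
import shlex
from typing import List, Tuple


def create_cluster_command(cluster_name: str) -> List[str]:
    """Command to run on the first node; the name cannot be changed later."""
    return ["pvecm", "create", cluster_name]


def join_cluster_command(existing_node_ip: str) -> List[str]:
    """Command to run on each additional node, pointing at a current cluster member."""
    return ["pvecm", "add", existing_node_ip]


def status_command() -> List[str]:
    """Command to check cluster state after creating or joining."""
    return ["pvecm", "status"]


# Hypothetical plan: host names follow the tutorial, name and IP are placeholders.
plan: List[Tuple[str, List[str]]] = [
    ("pve-test", create_cluster_command("demo-cluster")),
    ("pve-test", status_command()),
    ("pve-test2", join_cluster_command("192.0.2.10")),
    ("pve-test2", status_command()),
]

for host, command in plan:
    # shlex.join quotes arguments safely for copy-and-paste into an SSH session.
    print(f"{host}$ {shlex.join(command)}")
```

Printing the plan instead of running it keeps the sketch safe to try anywhere; the printed lines would be typed into an SSH session on the named host.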


This value is sometimes recommended to improve performance when sufficient memory exists in a system. Should you need or want to add swap, it is preferred to create a partition on a physical disk and use it as a swap device. Log in to the web interface on an existing cluster node. See also this section on editing the kernel command line. For Proxmox VE versions up to 4. As an example, I have 3x 4 TB disks and use 3 replicas, so I can use 4 TB, because every disk holds a copy. Configure Hardware Watchdog: by default, all hardware watchdog modules are blocked for security reasons. After that, the LRM may safely close the watchdog during a restart. This ensures the system boots even if the first boot device fails or if the BIOS can only boot from a particular disk. Each such volume is owned by a VM or container. For example, to start VMs , , and , regardless of whether they have onboot set, you can use:. For those releases, you need to first upgrade Ceph to a newer release before upgrading to Proxmox VE 8. The main advantage is that you can directly configure the NFS server properties, so the backend can mount the share automatically.
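The replica example above (three 4 TB disks with three replicas) follows from a simple division of raw capacity by the number of copies. The sketch below is a simplified model that ignores filesystem overhead, reserved space and rebalancing headroom; the function name is hypothetical.

```python
from typing import Sequence


def usable_capacity_tb(disk_sizes_tb: Sequence[float], replicas: int) -> float:
    """Approximate usable capacity when every object is stored `replicas` times."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return sum(disk_sizes_tb) / replicas


# The case from the text: 3 x 4 TB with 3 replicas.
print(usable_capacity_tb([4, 4, 4], replicas=3), "TB usable")
```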

For production servers, high-quality server equipment is needed. Proxmox VE supports clustering, which means that multiple Proxmox VE installations can be centrally managed thanks to the integrated cluster functionality. See more details in the Requirements documentation.

You can also use the normal VM and container management commands. Start Failure Policy: the start failure policy comes into effect if a service failed to start on a node one or more times. With thin provisioning activated, only the blocks that the guest system actually uses will be written to the storage. It is also possible to abort a running task there. This option is only recommended for advanced users because detailed knowledge about Proxmox VE is required. The pool is used as the default ZIL location; diverting the ZIL I/O load to a separate device can help to reduce transaction latencies while relieving the main pool, increasing overall performance. Encryption requires a lot of computing power, so this setting is often changed to insecure to achieve better performance. Basic Scheduler: the number of active HA services on each node is used to choose a recovery node. ZFS depends heavily on memory, so you need at least 8 GB to start. A resource configuration inside that list looks like this:. The key material only needs to be loaded once per encryptionroot to be available to all encrypted datasets underneath it. The default value is 0 spares. That means if a service is restarted without fixing the error, only the restart policy gets repeated. This is needed so that the LRM does not execute an outdated command.
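The basic scheduler rule mentioned above, choosing a recovery node by its number of active HA services, can be illustrated with a few lines of Python. This is only a sketch of the counting idea: the function name, the node names and the alphabetical tie-break are assumptions, and the real HA manager takes more into account than this.

```python
from typing import Dict


def choose_recovery_node(active_services: Dict[str, int], failed_node: str) -> str:
    """Pick the surviving node that currently runs the fewest active HA services."""
    candidates = {
        node: count for node, count in active_services.items() if node != failed_node
    }
    if not candidates:
        raise ValueError("no surviving node available for recovery")
    # Assumed tie-break: lowest service count first, then node name alphabetically.
    return min(candidates, key=lambda node: (candidates[node], node))


# Hypothetical cluster state: node name -> number of active HA services.
services = {"pve1": 3, "pve2": 1, "pve3": 2}
print(choose_recovery_node(services, failed_node="pve2"))
```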
