Pivot pyspark

Pivots a column of the current DataFrame and performs the specified aggregation.

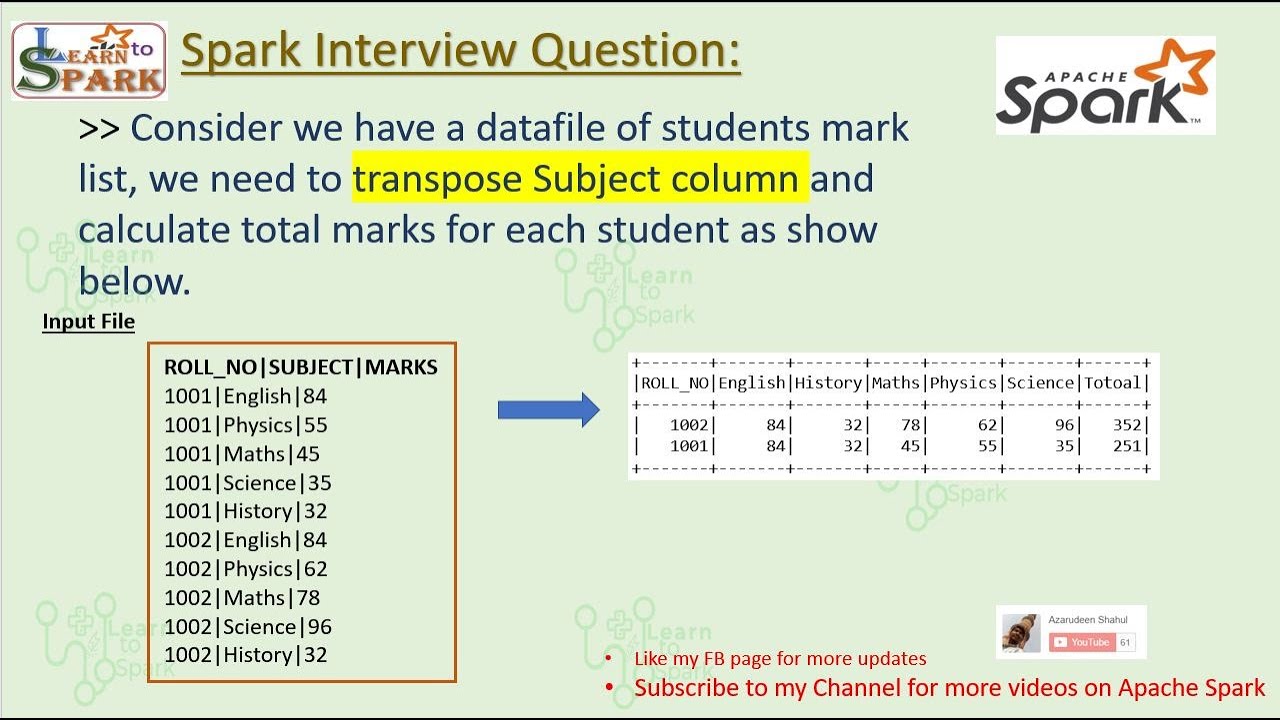

Pivot is an aggregation in which the distinct values of one grouping column are transposed into individual columns. PySpark SQL provides the pivot function to rotate the data from one column into multiple columns. For example, to get the total amount exported to each country for each product, group by Product, pivot by Country, and sum Amount. This transposes the countries from DataFrame rows into columns.

Pivoting is a data transformation technique that converts rows into columns. It is useful when reorganizing data for readability, aggregation, or analysis. In PySpark, pivot is a method of GroupedData objects: you first group the DataFrame with groupBy, then call pivot on the resulting GroupedData, and finally apply an aggregation function. The general syntax is GroupedData.pivot(pivot_col, values=None), where values is an optional list of pivot-column values to turn into columns; if it is not specified, all unique values in the pivot column are used. For example, pivoting quarterly revenue data by region produces separate US and EU revenue columns for each quarter. Unpivoting does the reverse, turning columns back into rows. If the pivot column has a large number of unique values, the resulting DataFrame may become extremely large, potentially exceeding available memory and causing performance issues.

For the Product/Country example, unpivoting converts the country columns back into rows.

Pivoting is a widely used technique in data analysis that transforms data from a long format to a wide format by aggregating it on specific criteria. PySpark, the Python API for Apache Spark, provides a flexible set of built-in functions for pivoting DataFrames, letting you build pivot tables from large datasets. In this blog post, we provide a comprehensive guide to using the pivot function in PySpark DataFrames, covering basic pivot operations, custom aggregations, and pivot table manipulation techniques. To create a pivot table in PySpark, combine the groupBy and pivot functions with an aggregation function such as sum, count, or avg: groupBy groups the data by one column (for example "GroupColumn"), and pivot turns the distinct values of another column (for example "PivotColumn") into columns.

Often when viewing data, we have it stored in an observation (long) format, and sometimes we would like to turn a categorical feature into columns. We can use the pivot method for this. In this article, we will learn how to use PySpark pivot. The quickest way to get started working with Python is to use a Docker Compose file: simply create a docker-compose file and run it, and you will then see a link in the console that opens a Jupyter notebook. Let's say we would like to aggregate the data to show averages.

Pivoting or transposing a DataFrame without aggregation, from rows to columns and from columns to rows, can also be done with a workaround in PySpark or Scala. In this blog post, we have provided a comprehensive guide to using the pivot function in PySpark DataFrames, discussed the limitations of pivoting, and shared some best practices for optimal usage.