Pandas to Spark

This is a short introduction to pandas API on Spark, geared mainly toward new users. This notebook shows some key differences between pandas and pandas API on Spark. You can create a pandas-on-Spark Series by passing a list of values, letting pandas API on Spark create a default integer index. You can create a pandas-on-Spark DataFrame by passing a dict of objects that can be converted to series-like structures. Columns can have specific dtypes; types that are common to both Spark and pandas are currently supported.
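As a minimal sketch of the two constructors described above, assuming `pyspark` (with its pandas API) is installed: the sample values, the column names, and the helper `build_pandas_on_spark_objects` are illustrative and not taken from the original notebook. The Spark import is kept inside the function so the plain Python inputs can be inspected without a Spark installation.

```python
# Plain Python inputs; the values are illustrative only.
values = [1, 3, 5, float("nan"), 6, 8]
columns = {
    "a": [1, 2, 3],
    "b": [100.0, 200.0, 300.0],
    "c": ["one", "two", "three"],
}


def build_pandas_on_spark_objects():
    """Create a pandas-on-Spark Series and DataFrame (hypothetical helper).

    Requires pyspark; the import is lazy so the inputs above load without it.
    """
    import pyspark.pandas as ps

    # No index is passed, so a default integer index is attached.
    psser = ps.Series(values)
    # Each dict key becomes a column; the dtype is inferred per column.
    psdf = ps.DataFrame(columns)
    return psser, psdf
```

Calling `build_pandas_on_spark_objects()` in an environment with Spark available should return a Series and a DataFrame whose `dtypes` can be inspected much as in pandas.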

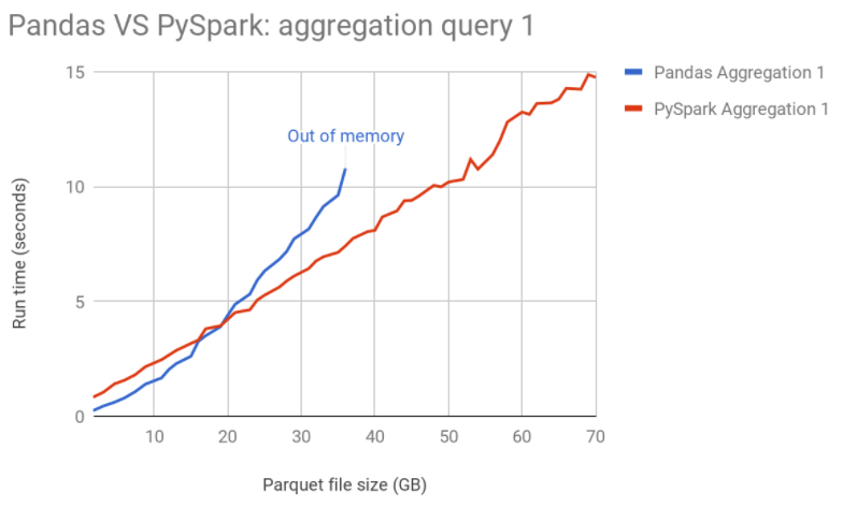

As a data scientist or software engineer, you may often find yourself working with large datasets that require distributed computing. Apache Spark is a distributed computing framework that handles big data processing tasks efficiently. We assume that you have a basic understanding of Python, pandas, and Spark.

A pandas DataFrame is a two-dimensional, table-like data structure used to store and manipulate data in Python. It is similar to a spreadsheet or a SQL table and consists of rows and columns. You can perform various operations on a pandas DataFrame, such as filtering, grouping, and aggregation.

A Spark DataFrame is a distributed collection of data organized into named columns. It is similar to a pandas DataFrame but is designed to process data that is spread across a cluster.

Scalability: pandas is designed to work on a single machine and may not handle large datasets efficiently. Spark, on the other hand, can distribute the workload across multiple machines, making it well suited to big data processing tasks.
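The pandas operations mentioned above (filtering, grouping, and aggregation) can be sketched as follows. The column names and values are made up for illustration.

```python
import pandas as pd

# A small in-memory table; the data is illustrative only.
pdf = pd.DataFrame(
    {
        "city": ["Oslo", "Oslo", "Lima", "Lima"],
        "sales": [10, 20, 5, 15],
    }
)

# Filtering: keep the rows where sales is at least 10.
filtered = pdf[pdf["sales"] >= 10]

# Grouping and aggregation: total sales per city.
totals = pdf.groupby("city")["sales"].sum()
```

All of this runs in the memory of a single machine, which is the limitation that the scalability point refers to.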

To use pandas, first import it with `import pandas as pd`. pandas runs operations on a single node, whereas PySpark distributes them across multiple cores and machines. For datasets too large for one machine to process comfortably, this parallel execution can make PySpark many times faster than pandas; for small datasets, the overhead of distribution can outweigh the gain.

If you want every column to be a string, cast the pandas DataFrame to strings before passing it to `spark.createDataFrame`.

Arrow is disabled by default, so you need to enable it, and Apache Arrow (PyArrow) must be installed on all Spark cluster nodes, either with `pip install pyspark[sql]` or by downloading it directly from Apache Arrow for Python. If a Spark-compatible version of Apache Arrow is not installed, the Arrow-based conversion fails with an error.
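A minimal sketch of the two steps discussed above, under some assumptions: the sample data and the helper name `to_spark_with_arrow` are hypothetical, and the configuration key shown is the one used by Spark 3.x (older releases used `spark.sql.execution.arrow.enabled`). The helper expects an existing SparkSession and is not called here, so the pandas part runs without Spark.

```python
import pandas as pd

# Illustrative data only.
pdf = pd.DataFrame({"id": [1, 2], "score": [0.5, 1.5]})

# Casting on the pandas side turns every value into a string.
pdf_as_str = pdf.astype(str)


def to_spark_with_arrow(spark, pandas_df):
    """Convert a pandas DataFrame with Arrow enabled (hypothetical helper).

    `spark` is assumed to be an existing SparkSession, and PyArrow is
    assumed to be installed on the cluster nodes.
    """
    # Spark 3.x key; Spark 2.x used "spark.sql.execution.arrow.enabled".
    spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true")
    return spark.createDataFrame(pandas_df)
```

Passing `pdf_as_str` instead of `pdf` to the helper would be one way to get string columns on the Spark side.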

Sometimes we receive data as csv, xlsx, and similar files, which we load into a pandas DataFrame first. For the conversion, we pass the pandas DataFrame to the `createDataFrame` method. The examples follow the same pattern: create a pandas DataFrame, then convert it using spark. The dataset used here is the heart dataset. We can also convert a PySpark DataFrame back to a pandas DataFrame by calling a method on the Spark DataFrame.
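The conversion in both directions might look like the sketch below. It assumes `pyspark` and a Java runtime are available; the sample data, the application name, and the helper `round_trip` are illustrative. `toPandas()` is PySpark's method for collecting a Spark DataFrame into a pandas one, and it pulls all rows onto the driver, so it suits small results only.

```python
import pandas as pd

# Illustrative data; a real workflow might load this with pd.read_csv instead.
pdf = pd.DataFrame({"name": ["Ann", "Bob"], "age": [31, 45]})


def round_trip(pandas_df):
    """pandas -> Spark -> pandas (hypothetical helper; needs pyspark and Java)."""
    from pyspark.sql import SparkSession

    # Build (or reuse) a session and give the application a name.
    spark = SparkSession.builder.appName("pandas-to-spark-sketch").getOrCreate()

    sdf = spark.createDataFrame(pandas_df)  # pandas DataFrame -> Spark DataFrame
    back = sdf.toPandas()                   # Spark DataFrame -> pandas DataFrame
    return sdf, back
```

With Spark installed, `sdf, back = round_trip(pdf)` should give a Spark DataFrame with the same two columns and a pandas copy of the same rows.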

Before converting, build the SparkSession and give it a name.

Integration: Spark integrates with other big data technologies, such as Hadoop and Kafka, making it a popular choice for big data processing tasks.

You can skip to the next section if you already know this. pandas is one of the most popular open-source libraries in the Python ecosystem; it runs on a single machine and is single-threaded. It is widely used and is the de facto framework for data science, data analysis, and machine learning applications.

In this article, you have learned how to convert a pandas DataFrame to a Spark DataFrame and how to optimize the conversion using the Apache Arrow in-memory columnar format. Using the Arrow optimizations produces the same results as when Arrow is not enabled. In pandas API on Spark, the natural row order can be preserved by setting one of the `compute.` options.
