Flink keyby

Operators transform one or more DataStreams into a new DataStream.

In this section you will learn about the APIs that Flink provides for writing stateful programs. Please take a look at Stateful Stream Processing to learn about the concepts behind stateful stream processing.

If you want to use keyed state, you first need to specify a key on a DataStream that should be used to partition the state and also the records in the stream themselves. This yields a KeyedStream, which then allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record. The key can be of any type and must be derived from deterministic computations.
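The idea of a key selector can be sketched in plain Java. This is not Flink code: the `Order` type, the `BY_ITEM_TYPE` selector, and the hash-modulo partition assignment are all assumptions made for illustration, and Flink's real key-to-partition mapping is more involved. The sketch only shows why a deterministic key matters: two records with the same key always land in the same partition.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Plain-Java illustration; none of these types are Flink classes.
final class KeySelectorSketch {

    // Hypothetical record type for the example.
    static final class Order {
        final String itemType;
        final int amount;

        Order(String itemType, int amount) {
            this.itemType = itemType;
            this.amount = amount;
        }
    }

    // Key selector: takes one record and returns its key. It is deterministic
    // because the result depends only on the record's own content.
    static final Function<Order, String> BY_ITEM_TYPE = order -> order.itemType;

    // Assumption: hash modulo parallelism stands in for the real mapping.
    static <T, K> int partitionFor(T record, Function<T, K> keySelector, int parallelism) {
        K key = keySelector.apply(record);
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Splits the records into `parallelism` partitions by key.
    static <T, K> List<List<T>> partitionByKey(
            List<T> records, Function<T, K> keySelector, int parallelism) {
        List<List<T>> partitions = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            partitions.add(new ArrayList<>());
        }
        for (T record : records) {
            partitions.get(partitionFor(record, keySelector, parallelism)).add(record);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<Order> orders = Arrays.asList(
                new Order("book", 5), new Order("pen", 2), new Order("book", 7));
        List<List<Order>> partitions = partitionByKey(orders, BY_ITEM_TYPE, 4);
        for (int i = 0; i < partitions.size(); i++) {
            System.out.println("partition " + i + " holds " + partitions.get(i).size() + " record(s)");
        }
    }
}
```

A key derived from something non-deterministic, such as a random number, would send records with the same logical key to different partitions, and any per-key state would be split across them.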

This article explains the basic concepts, installation, and deployment process of Flink.

Definitions of stream processing vary. Conceptually, stream processing and batch processing are two sides of the same coin. Which one applies depends on how the data is viewed: the elements of a Java ArrayList, for example, can be treated as a bounded dataset and accessed by index, or consumed one at a time through an iterator.

Figure 1. On the left is a coin classifier, which can be described as a stream processing system. All components used for coin classification are connected in series in advance. Coins continuously enter the system and are output to different queues for later use. The same is true for the picture on the right.

Programs can combine multiple transformations into sophisticated dataflow topologies. The basic transformations are:

Map: Takes one element and produces one element. For example, a map function can double the values of the input stream.

FlatMap: Takes one element and produces zero, one, or more elements. For example, a flatmap function can split sentences into words.

Filter: Evaluates a boolean function for each element and retains those for which the function returns true. For example, a filter can drop zero values.

KeyBy: Logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition.
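The three element-wise transformations described here (doubling values, splitting sentences into words, dropping zeros) can be sketched with `java.util.stream`. This is plain Java on in-memory lists, not the Flink API; the class and method names are made up for the example. In a Flink program the corresponding calls would be `map`, `flatMap`, and `filter` on a DataStream.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java illustration of map / flatMap / filter semantics on bounded lists.
final class BasicTransformSketch {

    // Map: one element in, exactly one element out.
    static List<Integer> doubleValues(List<Integer> input) {
        return input.stream()
                .map(value -> 2 * value)
                .collect(Collectors.toList());
    }

    // FlatMap: one element in, zero or more elements out.
    // An empty sentence produces no words at all.
    static List<String> splitWords(List<String> sentences) {
        return sentences.stream()
                .flatMap(sentence -> Arrays.stream(sentence.split(" ")))
                .filter(word -> !word.isEmpty())
                .collect(Collectors.toList());
    }

    // Filter: keep only the elements for which the predicate returns true.
    static List<Integer> dropZeros(List<Integer> input) {
        return input.stream()
                .filter(value -> value != 0)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(doubleValues(Arrays.asList(1, 2, 3)));
        System.out.println(splitWords(Arrays.asList("to be", "or not")));
        System.out.println(dropZeros(Arrays.asList(0, 1, 0, 2)));
    }
}
```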

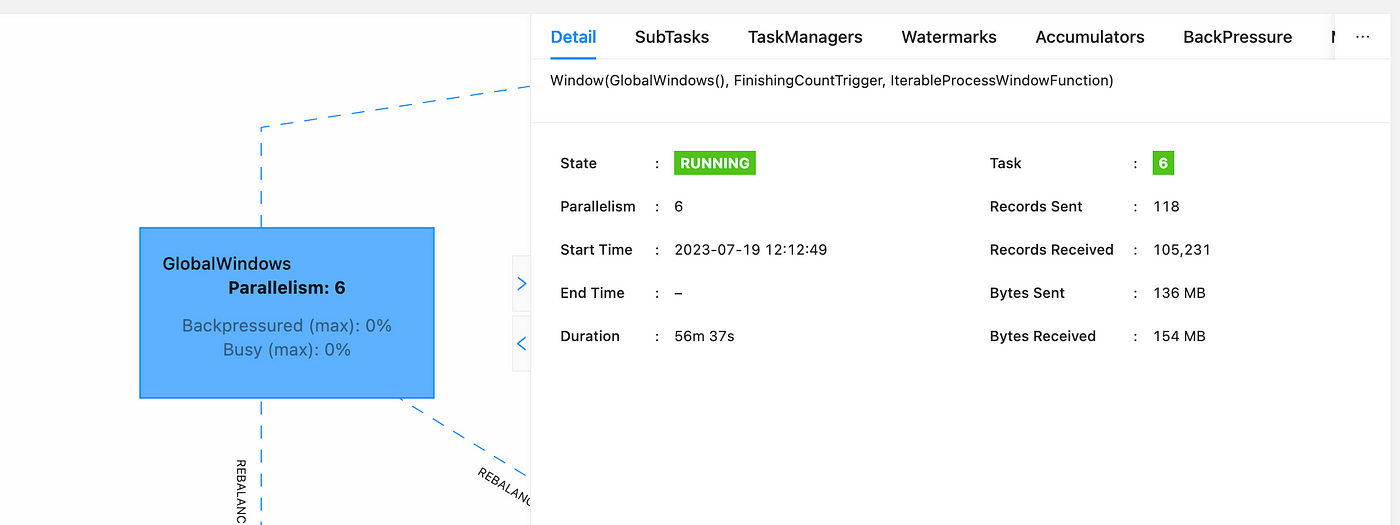

Flink uses a concept called windows to divide a potentially infinite DataStream into finite slices based on the timestamps of elements or other criteria. This division is required when working with infinite streams of data and performing transformations that aggregate elements.

Info: We will mostly talk about keyed windowing here, i.e. windows that are applied on a KeyedStream. Keyed windows have the advantage that elements are subdivided based on both window and key before being given to a user function. The work can thus be distributed across the cluster because the elements for different keys can be processed independently.
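To make the "subdivided based on both window and key" idea concrete, here is a minimal plain-Java sketch. It assumes fixed-size, non-overlapping (tumbling) windows aligned to the epoch, and a hypothetical `Event` type; it is not Flink's window operator and ignores watermarks, triggers, and late data. Each element is assigned a window start from its timestamp, and values are summed per (key, window) pair.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of keyed, fixed-size windows; not the Flink API.
final class KeyedWindowSketch {

    // Hypothetical event type for the example.
    static final class Event {
        final String key;
        final long timestampMillis;
        final int value;

        Event(String key, long timestampMillis, int value) {
            this.key = key;
            this.timestampMillis = timestampMillis;
            this.value = value;
        }
    }

    // Start of the fixed-size window that contains the timestamp.
    static long windowStart(long timestampMillis, long windowSizeMillis) {
        return timestampMillis - Math.floorMod(timestampMillis, windowSizeMillis);
    }

    // Sums values per key and, within each key, per window start.
    static Map<String, Map<Long, Integer>> sumPerKeyAndWindow(
            List<Event> events, long windowSizeMillis) {
        Map<String, Map<Long, Integer>> sums = new TreeMap<>();
        for (Event event : events) {
            long start = windowStart(event.timestampMillis, windowSizeMillis);
            sums.computeIfAbsent(event.key, k -> new TreeMap<>())
                    .merge(start, event.value, Integer::sum);
        }
        return sums;
    }

    public static void main(String[] args) {
        List<Event> events = Arrays.asList(
                new Event("a", 1000L, 1),
                new Event("a", 4000L, 2),
                new Event("a", 6000L, 3),
                new Event("b", 2000L, 10));
        System.out.println(sumPerKeyAndWindow(events, 5000L));
    }
}
```

Because each (key, window) pair is aggregated independently, the per-key maps could live on different machines, which is the distribution advantage the paragraph above describes.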

The DataStream API abstracts data streams into an infinite set, defines a group of operations on that set, and then automatically builds the corresponding DAG at the bottom layer. The entire computational logic graph is built by calling operations on a DataStream object to generate new DataStream objects. The description of a job vertex is constructed from the descriptions of the operators in it.

Broadcast: An upstream operator sends each record to all instances of the downstream operator.

KeyBy: After data is split with KeyBy, each subsequent operator instance processes the data for a specific set of keys.

Non-keyed (operator) state is held as a list of objects. In other words, these objects are the finest granularity at which non-keyed state can be redistributed.

State entries can also expire. During cleanup, the traversed state entries are checked and expired ones are removed. When state time-to-live is enabled, the RocksDB state backend adds 8 bytes per stored value, list entry or map entry.
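The difference between sending each record to all downstream instances and sending each record to just one of them can be sketched in plain Java. The class and method names below are hypothetical, downstream instances are modeled as simple lists, and the round-robin variant always starts at instance 0, which is a simplification rather than a statement about Flink's behavior.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java illustration of two redistribution schemes; not the Flink API.
final class RedistributionSketch {

    private static <T> List<List<T>> emptyInstances(int count) {
        List<List<T>> instances = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            instances.add(new ArrayList<>());
        }
        return instances;
    }

    // Broadcast: every downstream instance receives every record.
    static <T> List<List<T>> broadcast(List<T> records, int downstreamInstances) {
        List<List<T>> instances = emptyInstances(downstreamInstances);
        for (T record : records) {
            for (List<T> instance : instances) {
                instance.add(record);
            }
        }
        return instances;
    }

    // Round-robin: each record goes to exactly one instance, in rotation.
    static <T> List<List<T>> roundRobin(List<T> records, int downstreamInstances) {
        List<List<T>> instances = emptyInstances(downstreamInstances);
        int next = 0;
        for (T record : records) {
            instances.get(next).add(record);
            next = (next + 1) % downstreamInstances;
        }
        return instances;
    }

    public static void main(String[] args) {
        List<Integer> records = Arrays.asList(1, 2, 3, 4, 5);
        System.out.println("broadcast:   " + broadcast(records, 2));
        System.out.println("round-robin: " + roundRobin(records, 2));
    }
}
```

Broadcast multiplies the data volume by the number of downstream instances, while round-robin keeps the volume constant and evens out the load, but neither guarantees that records with the same key meet on the same instance. That guarantee is what KeyBy adds.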

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation.

When records move between operators, they may need to be redistributed across parallel instances. There are different schemes for doing this redistribution.

Rebalance: An upstream operator sends data on a round-robin basis.

Some schemes require only local data transfers instead of transferring data over the network, depending on other configuration values such as the number of slots of TaskManagers.

There are different ways to specify keys. In some cases, the type information also needs to be specified explicitly.

As an example, assume that there is a data source that monitors orders in the system. To count the transaction volume of each item type, use KeyBy to group the input stream by the first field of the Tuple (item type), and then sum the values in the second field (transaction volume) of the records corresponding to each key. One way to track the result is to use a HashMap that maintains the current transaction volume of each item type.

Figure 8.

When a snapshot is taken, the ListState is cleared of all objects included by the previous checkpoint, and is then filled with the new ones we want to checkpoint.

Operator chaining can also be controlled: it is possible to begin a new chain, starting with a given operator.
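The transaction-volume example can be sketched in plain Java with a HashMap. The `Transaction` type and method names are assumptions made for illustration, and the input is an in-memory list rather than a stream. The `runningTotals` method emits the updated total after every record, in the spirit of a rolling per-key sum; `totalsByItemType` returns only the final state of the map.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of "group by item type, then sum"; not the Flink API.
final class ItemVolumeSketch {

    // Hypothetical two-field record: (item type, transaction volume).
    static final class Transaction {
        final String itemType;
        final int volume;

        Transaction(String itemType, int volume) {
            this.itemType = itemType;
            this.volume = volume;
        }
    }

    // Final transaction volume per item type.
    static Map<String, Integer> totalsByItemType(List<Transaction> transactions) {
        Map<String, Integer> totals = new HashMap<>();
        for (Transaction tx : transactions) {
            totals.merge(tx.itemType, tx.volume, Integer::sum);
        }
        return totals;
    }

    // Emits (item type, updated total) once per input record.
    static List<Map.Entry<String, Integer>> runningTotals(List<Transaction> transactions) {
        Map<String, Integer> totals = new HashMap<>();
        List<Map.Entry<String, Integer>> emitted = new ArrayList<>();
        for (Transaction tx : transactions) {
            int updated = totals.merge(tx.itemType, tx.volume, Integer::sum);
            emitted.add(new AbstractMap.SimpleEntry<String, Integer>(tx.itemType, updated));
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Transaction> transactions = Arrays.asList(
                new Transaction("book", 5),
                new Transaction("pen", 2),
                new Transaction("book", 3));
        System.out.println("running: " + runningTotals(transactions));
        System.out.println("final:   " + totalsByItemType(transactions));
    }
}
```

In a distributed job a single HashMap like this would not be shared across parallel instances, which is why the stream is first partitioned by key so that each instance only ever sees, and keeps totals for, its own set of keys.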
