Dask dtypes

Hello team, I am trying to use Parquet to store a DataFrame with a vector column. My code looks like: It looks like Dask incorrectly assumes the list-of-floats column is a string column and converts it automatically. The dtype of df looks correct, but this is misleading: it just returns what you specified as meta previously.

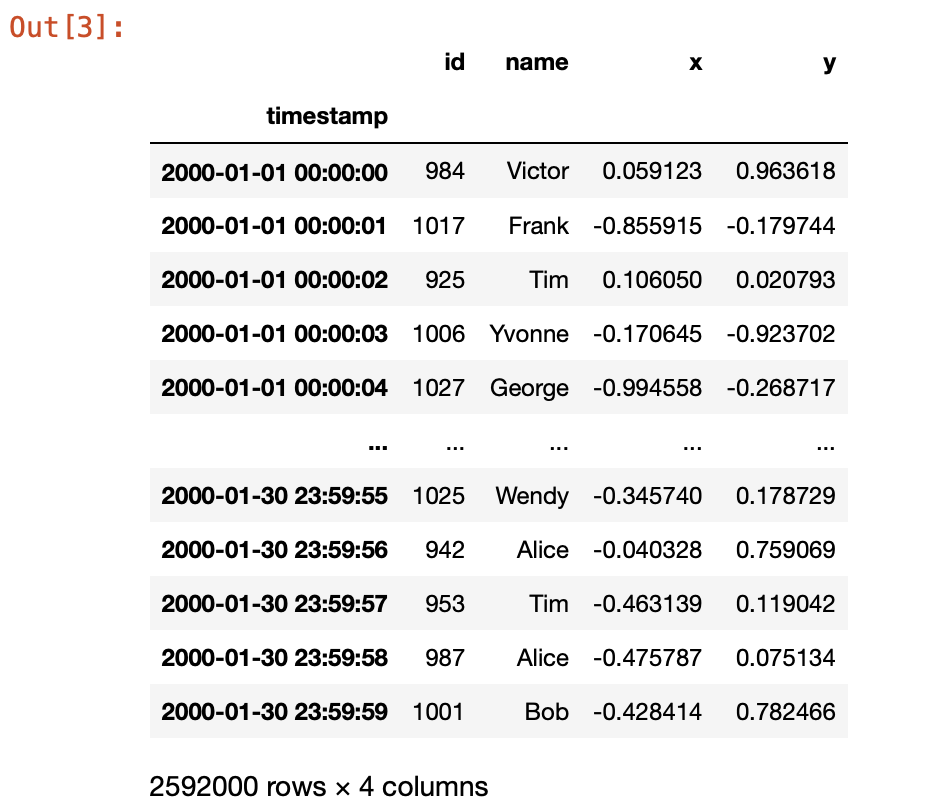

Dask is a useful framework for parallel processing in Python. If you already have some knowledge of pandas or a similar data processing library, then this short introduction to Dask fundamentals is for you. Specifically, we'll focus on some of the lower-level Dask APIs. Understanding these is crucial to understanding common errors and performance issues you'll encounter when using the high-level APIs of Dask. To follow along, you should have Dask installed and a notebook environment like Jupyter Notebook running. We'll start with a short overview of the high-level interfaces. The printed Dask DataFrame looks similar to a pandas DataFrame, but there are no values in the table. Notice how the variable is called ddf. This stands for Dask DataFrame. It's a useful convention to use this name instead of df (which is common when dealing with pandas DataFrames) so you can easily distinguish the two. In general, you'll see lazy computing applied whenever you call a method on a Dask collection: computation is not triggered at the time you call the method, only when you call compute().

Columns in Dask DataFrames are typed, which means each column can only hold values of a certain type. This post gives an overview of DataFrame datatypes (dtypes), explains how to set dtypes when reading data, and shows how to change column types. Using column types that require less memory can be a great way to speed up your workflows. Properly setting dtypes when reading files is sometimes needed for your code to run without error. Create a pandas DataFrame and print the dtypes. All code snippets in this post are from this notebook. Change the nums column to int8.

Dask makes it easy to read a small file into a Dask DataFrame. Suppose you have a dogs. For a single small file, Dask may be overkill and you can probably just use pandas. Dask starts to gain a competitive advantage when dealing with large CSV files. A common rule of thumb when working with pandas is to have at least 5x the size of your dataset available as RAM; consider Dask once you exceed this limit. Dask DataFrames are composed of multiple partitions, each of which is a pandas DataFrame. Dask intentionally splits the data into multiple pandas DataFrames so operations can be performed on multiple slices of the data in parallel. See the coiled-datasets repo for more information about accessing sample datasets. The number of partitions depends on the value of the blocksize argument.

At its core, the dask. One operation on a Dask DataFrame triggers many pandas operations on the constituent pandas DataFrames, in a way that is mindful of potential parallelism and memory constraints.

What we see here is a task graph. The 2 is passed in as the second argument to each call. The assign operation in Dask completed in 0. I let this computation run for 30 minutes before canceling the query. This means that our Dask graphs can consume a lot of memory. To solve this, we can use Dask! In this tutorial, we will use dask. In this section we do a few dask. ArrowDtype pa. Dask constructs the DAG (directed acyclic graph) from the Delayed objects we looked at above.
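
A minimal sketch of how `dask.delayed` builds such a graph, assuming the graph under discussion is a handful of squaring operations (the inputs `range(5)` and the final `sum` are illustrative, not taken from the original example):

```python
from dask import delayed

# Five independent power operations: 2 is passed as the second argument each time
squares = [delayed(pow)(i, 2) for i in range(5)]

# Combining them adds one more node that depends on all five
total = delayed(sum)(squares)

# Nothing has run yet; compute() walks the DAG and executes it
print(total.compute())  # 30
```

Calling `total.visualize()` would render the five power subgraphs feeding into the final sum, but it requires the optional graphviz dependency.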

As with Dask DataFrames, our array doesn't contain any concrete values until we call compute on it, but we can see some relevant metadata in its string representation. Simple enough, right? Dask can decide later on the best place to run the actual computation. These dtype inference problems are common when using CSV files, because Dask infers each column's dtype from a sample taken at the start of the data rather than from every row. Now we see the five subgraphs, each representing a power operation. A very simple first test might be something like the following: Let's start by trying to get the shape of our data.