CUDA cores meaning

CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming. CUDA was created by Nvidia. The graphics processing unit (GPU), as a specialized computer processor, addresses the demands of real-time, high-resolution 3D graphics and other compute-intensive tasks.
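
To make the idea of a compute kernel more concrete, here is a minimal sketch of how CUDA's launch model maps work onto threads. It is written in plain Python so it runs without a GPU, which means it is an emulation, not CUDA itself: the names `launch`, `saxpy_kernel` and `ThreadCtx` are hypothetical, and the "threads" run one after another. What it mirrors from real CUDA is the pattern of writing the kernel from a single thread's point of view, computing a global index from the block index, block size and thread index, and guarding against threads that fall past the end of the data.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ThreadCtx:
    block_idx: int   # plays the role of blockIdx.x in CUDA
    block_dim: int   # plays the role of blockDim.x
    thread_idx: int  # plays the role of threadIdx.x


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Call `kernel` once per (block, thread) pair, strictly one after another.

    On a real GPU these invocations would be scheduled onto CUDA cores and many
    would run at the same time; this emulation only mimics the indexing.
    """
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(ThreadCtx(b, block_dim, t), *args)


def saxpy_kernel(ctx: ThreadCtx, a: float, x: List[float], y: List[float]) -> None:
    """Written from one thread's point of view: update exactly one element of y."""
    i = ctx.block_idx * ctx.block_dim + ctx.thread_idx  # global thread index
    if i < len(x):  # the last block can contain more threads than remaining elements
        y[i] = a * x[i] + y[i]


if __name__ == "__main__":
    n = 10
    x = [float(k) for k in range(n)]
    y = [1.0] * n
    block_dim = 4
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks, 12 threads
    launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y)
    print(y)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

Because each invocation touches a different element, the order in which the "threads" run does not matter, and that independence is what lets a GPU spread the same work across thousands of cores.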

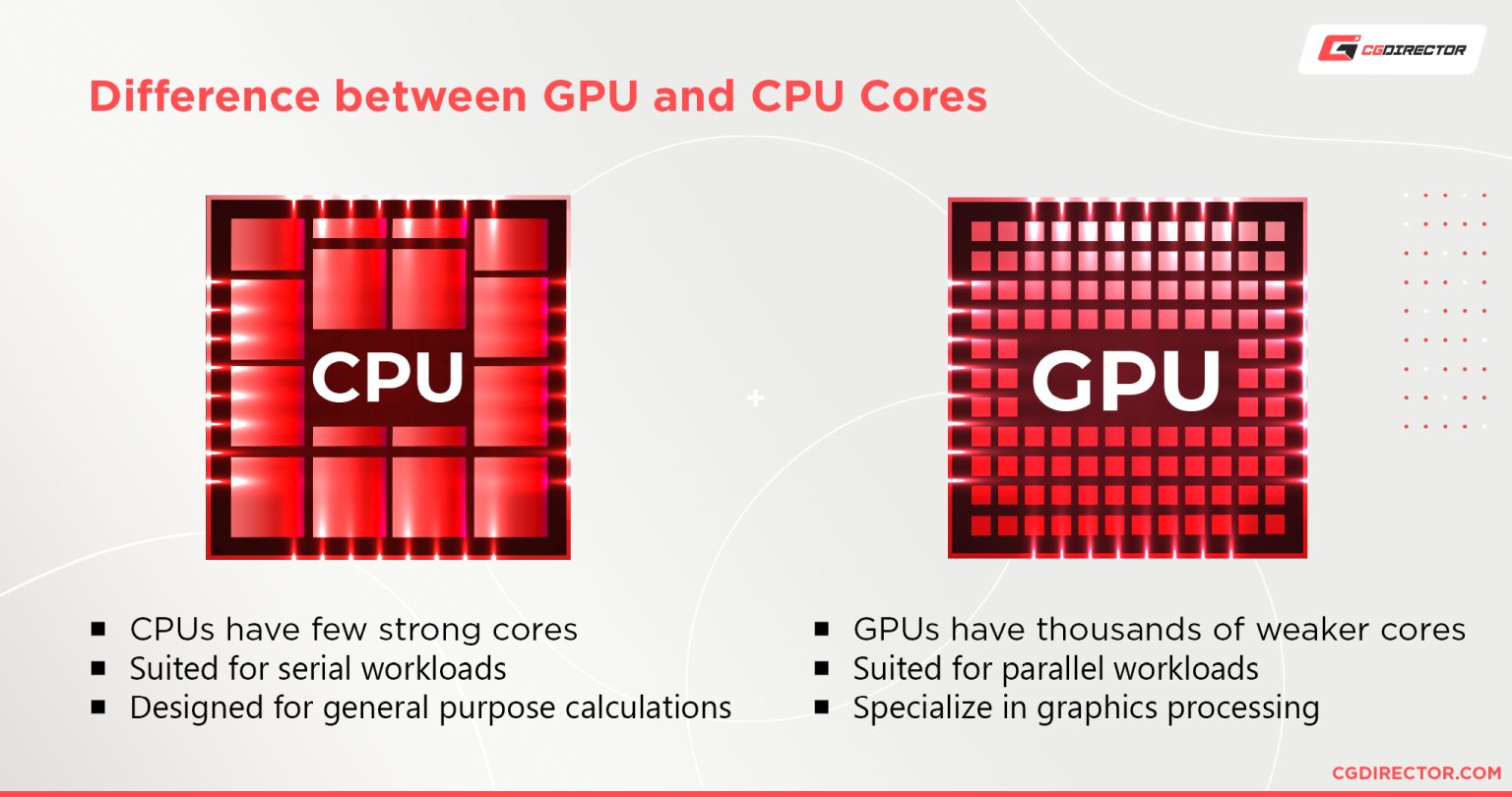

But what are CUDA cores? How are they different from regular CPU cores? Since their introduction, they have become an important part of high-performance computing. In this blog, we will explain what CUDA cores are and how they differ from other types of cores. We will also discuss the advantages of using CUDA cores and ways to employ them to accelerate performance. In short, CUDA cores are the parallel processing units inside Nvidia GPUs, designed to speed up certain types of calculations, particularly those needed for graphics processing. All else being equal, GPUs with more CUDA cores can perform these highly parallel calculations much faster than GPUs with fewer cores.

But as datasets increase in volume, complexity and cross-relationships, the demand for processing power surges as well. Machine learning is especially dependent on ingesting massive volumes of data to feed the model. Traditional CPUs, with comparatively few cores, struggle to handle such workloads and cannot deliver the computational throughput that ML model training needs; without that processing power, the entire system lags and training can slow to a crawl. This article walks through the impact of GPU-accelerated analytics platforms on ML. We will also look at the differences between CUDA cores and Tensor cores and determine which is the better fit for ML undertakings. Thanks to their highly efficient parallel processing capabilities, GPUs have emerged as the best option for massive-scale data processing and ML model training. GPU manufacturers like Nvidia, AMD and Intel are locked in an ongoing innovation race to design the most capable devices for performing floating-point arithmetic, delivering fast 3D visual processing and undertaking large-scale number crunching, among other functions. In the next section, we will discuss CUDA cores and Tensor cores separately and how you can use GPU computing for big data.

GPU cloud computing is becoming more and more popular as we move towards more data-intensive tasks like machine learning and big data analysis.

On the lookout for a new GPU and not totally sure what you should be looking for?

Speed up graphics-intensive processes with Compute Unified Device Architecture (CUDA). This streamlining takes advantage of parallel computing, in which thousands of tasks, or threads, are executed simultaneously. Nvidia CUDA cores are parallel processors, similar to the cores in a computer's CPU, which may be a dual- or quad-core processor. Nvidia GPUs, however, can have several thousand cores. When shopping for an Nvidia video card, you may see a reference to the number of CUDA cores the card contains. Because the cores carry out the actual arithmetic, their number is one of the factors that determine the speed and power of the GPU. Since CUDA cores process the data that moves through a GPU, they often handle video game graphics in situations where characters and scenery are loading.
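
The gap between a dual- or quad-core CPU and a GPU with thousands of cores is easiest to see with some back-of-the-envelope arithmetic. The sketch below assumes a workload of fully independent per-pixel tasks and treats every core as finishing exactly one task per round. The helper name `ideal_rounds` is hypothetical, and the 5,120-core figure is an illustrative assumption, not the specification of any particular card. CUDA cores are individually simpler and usually clocked lower than CPU cores, so this compares only the degree of parallelism, not actual run time.

```python
def ideal_rounds(num_tasks: int, num_cores: int) -> int:
    """Rounds needed if every core finishes exactly one independent task per round.

    Deliberately idealized: ignores clock speed, memory bandwidth, scheduling
    and any other overhead. Uses integer ceiling division.
    """
    if num_tasks < 0 or num_cores <= 0:
        raise ValueError("need num_tasks >= 0 and num_cores > 0")
    return (num_tasks + num_cores - 1) // num_cores


if __name__ == "__main__":
    pixels = 1920 * 1080  # one full-HD frame: 2,073,600 independent per-pixel tasks
    for label, cores in (("quad-core CPU", 4), ("GPU with 5,120 cores (illustrative)", 5_120)):
        print(f"{label}: {ideal_rounds(pixels, cores):,} rounds")
```

For one 1920×1080 frame this gives 518,400 rounds on four cores versus 405 rounds on 5,120 cores, which illustrates why per-pixel graphics work suits GPUs so well.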

CUDA enables developers to speed up compute-intensive applications by harnessing the power of GPUs for the parallelizable part of the computation. Early on, you could buy a 3D graphics accelerator from 3dfx so that you could run the first-person shooter game Quake at full speed. At the time, the principal reason for having a GPU was gaming. Later, a team of researchers led by Ian Buck unveiled Brook, the first widely adopted programming model to extend C with data-parallel constructs. The V (not shown in this figure) is another 3x faster for some loads (so up to x CPUs), and the A (also not shown) is another 2x faster (up to x CPUs). Note that not everyone reports the same speed boosts, and that software for model training on CPUs has improved, for example through the Intel Math Kernel Library. CPUs themselves have also improved, mostly by providing more cores. The speed boost from GPUs has come in the nick of time for high-performance computing. Deep learning has an outsized need for computing speed.
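
The phrase "the parallelizable part of the computation" matters, because the serial remainder still runs at CPU speed. Amdahl's law quantifies this: if a fraction p of the original run time is parallelizable and that part runs s times faster, the overall speedup is S = 1 / ((1 - p) + p / s). The function below is a minimal sketch of that formula; its name and the sample fractions are illustrative, not taken from this article.

```python
def amdahl_speedup(parallel_fraction: float, parallel_speedup: float) -> float:
    """Overall speedup when only part of a program is accelerated (Amdahl's law).

    parallel_fraction: share of the original run time that can be parallelized, in [0, 1]
    parallel_speedup:  factor by which that share is accelerated (e.g. on a GPU), > 0
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if parallel_speedup <= 0.0:
        raise ValueError("parallel_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / parallel_speedup)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99):
        # Assume the parallel portion runs 100x faster on the GPU.
        print(f"p = {p}: {amdahl_speedup(p, 100.0):.1f}x overall")
```

With a 100x faster parallel portion, a program that is 90% parallelizable speeds up only about 9.2x overall, and one that is 50% parallelizable only about 2x. Differences like these may be one reason reported GPU speed boosts vary so much between workloads.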

Nolan Foster. Foster offers expert consultations for empowering cloud infrastructure with customized solutions and comprehensive managed security.

She's a computer science graduate and has been writing about design, creativity and technology.
